Argonne takes a lead role in solving dark energy mystery

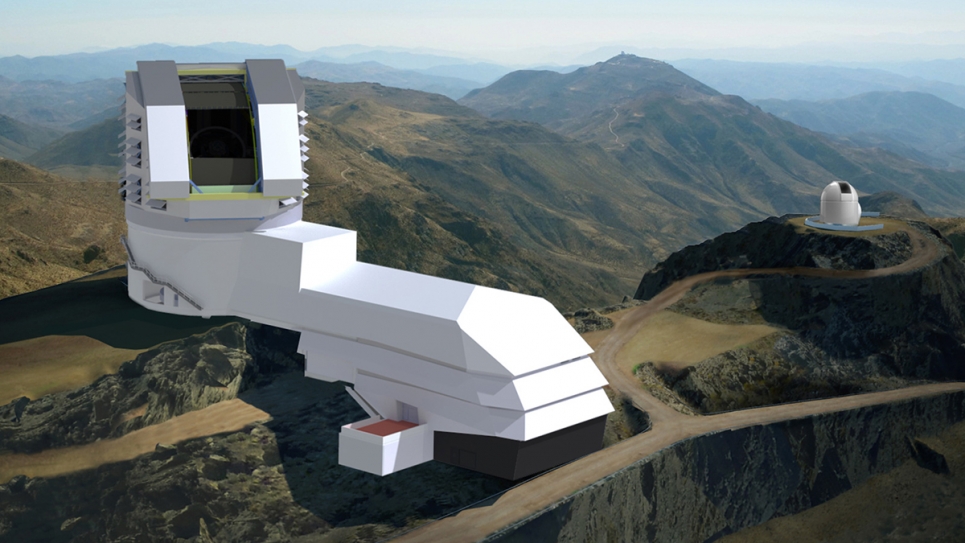

The Large Synoptic Survey Telescope (LSST) rests atop El Peñón, an 8,800-foot mountain peak along the Cerro Pachón ridge among the foothills of the Andes in northern Chile. It keeps good company with the closely situated Gemini Observatory-South and the Southern Astrophysical Research Telescope.

A partnership between the U.S. Department of Energy’s (DOE) Office of Science and the National Science Foundation (NSF), the LSST is among a new generation of ground-based telescopes supported by advanced supercomputing and data analysis tools, some of them provided by DOE’s Argonne National Laboratory.

The LSST is scheduled to begin its observations in 2021. Its 8.4-meter (27.6-foot) primary mirror, coupled with the world’s largest digital camera, will capture a continuous stream of images and generate massive datasets of roughly half the visible sky every night.

To prepare for this flood of data, an ambitious campaign is underway to create simulated LSST data that will test image analysis techniques and ensure that analysis on actual images is accurate, opening the door to pivotal discoveries of the cosmos.

Perhaps the results of those analyses will yield the clues to solving the enigma of dark energy and its relationship to the accelerated expansion of the universe, a riddle that has confounded science for nearly a century.

Believed to comprise roughly 70 percent of the universe, dark energy and its role in cosmic expansion form one of five interconnected areas of science supported by the Office of High Energy Physics within DOE’s Office of Science.

The LSST’s mission to unlock the mystery falls to its Dark Energy Science Collaboration (DESC), an international collaboration of more than 800 members, many from DOE facilities like Argonne, Brookhaven National Laboratory, Lawrence Berkeley National Laboratory, SLAC National Accelerator Laboratory and Fermilab.

Shedding light on dark energy

“We already know that understanding dark energy will be very difficult,” said Katrin Heitmann, Argonne physicist and computational scientist and Computing and Simulation Coordinator for the DESC. “But the results of this research could shed new light on the physical nature of dark energy and further our understanding of the universe and its evolution.”

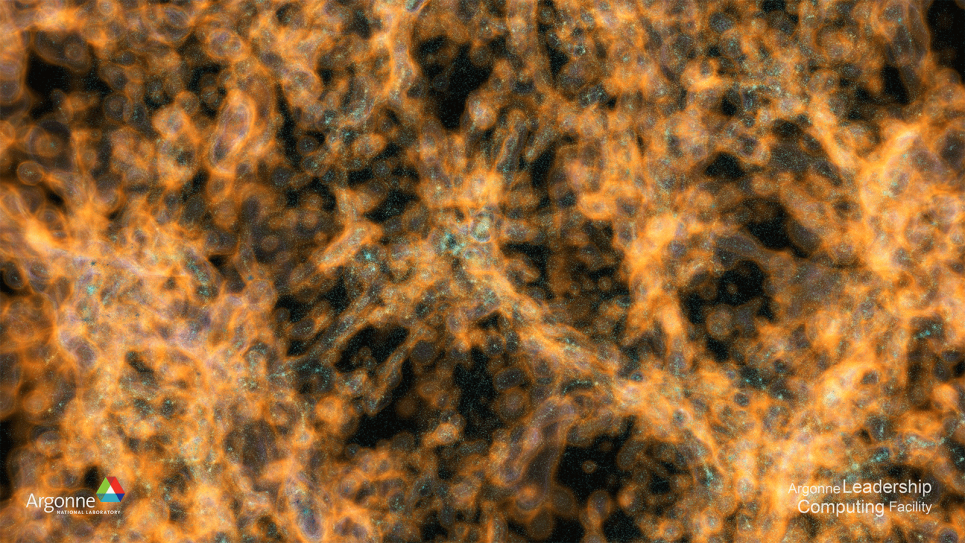

The picture is not entirely clear, but there is very strong evidence that galaxies are intimately bound to large clumps of dark matter, an unidentified form of matter five times more abundant than the visible matter in the universe. The dark matter distribution, initially very smooth, forms a complex web-like structure made of dark matter concentrations — non-luminous “halos” — in which galaxies are eventually formed. Understanding the distribution and its evolution may one day inform our understanding of dark energy.

“By applying this knowledge of dark matter and galaxies together, we can create a powerful tool to probe how the universe evolves over time and get a sense for how dark energy might fit into current cosmological models,” said Antonio Villarreal, a postdoctoral appointee at Argonne’s Leadership Computing Facility (ALCF), a DOE Office of Science User Facility.

He and more than 50 researchers from the DESC’s computing and analysis working groups are running a dress rehearsal of sorts to ready the stage for such discovery. Their set is a massive simulation intended to accurately reflect data generated by the LSST, with props and scenery guided by the composition of galaxies and by optical effects created by the atmosphere, the Milky Way, even the telescope itself.

The first scene opens on the “Outer Rim.”

A key player in this scenario, Heitmann was among a cohort of researchers utilizing the ALCF to produce the “Outer Rim” simulation, among the largest high-resolution simulations of the universe in the world. A cosmological canvas on which to describe galaxy models, the Outer Rim simulation tracks the distribution of matter in the universe as governed by the fundamental principles of physics, as well as input from earlier sky surveys.

Having served as the backdrop for other studies, the Outer Rim simulation is now the first step in an end-to-end pipeline of simulation and analysis that will prepare the way for the actual LSST data.

“We start the simulation at something like 50 million years after the Big Bang and we evolve it forward in order to provide cosmological surveys, like the LSST, with a map of the matter distribution in the universe, which is the backbone for modeling the galaxies that they will observe,” said Heitmann, who organizes the computational efforts within the collaboration.

Then, like artists animating a complicated scene in some computer-generated animated film, an Argonne-led DESC team populates this background with galaxies of all sizes, shapes and colors.

Quantitative criteria provided by the DESC’s analysis working groups help guide some of these decisions based on the eventual requirements of the science teams that will study the LSST results.

“The analysis working groups help define science priorities, like which galaxy populations are important to simulate in order to test our ability to measure dark energy, what properties do they need to have and how accurately should they represent real galaxies,” said Rachel Mandelbaum, associate professor of physics at Carnegie Mellon University and DESC analysis coordinator.

Informed by dark matter halos, or the clustering of dark matter throughout the Outer Rim simulation, a code called GalSampler also tells modelers roughly which types of galaxies are expected to reside in specific regions and what a galaxy should look like. Blue represents young galaxies that burst with star formation, older galaxies burn red in their final throes, and our own Milky Way is a green valley galaxy in its middle age, said Andrew Hearin, assistant physicist in Argonne’s High Energy Physics division.

The results are catalogs that serve as road maps to where every galaxy lives, providing scientists with a frame of reference as they focus the telescope on a particular slice of the sky.

Supercomputing sets the stage

This massive undertaking requires equally massive computing resources, the kind that DOE Office of Science User Facilities like the ALCF and the National Energy Research Scientific Computing Center (NERSC) provide. Heitmann and her colleagues routinely leverage thousands of nodes of DOE supercomputers for their simulations.

Working through the ALCF Data Science Program (ADSP) — an initiative targeting big data problems — the team is integrating codes developed by the collaboration into tools that will manage workflow on the Theta and Cori systems at ALCF and NERSC, respectively.

For example, the Parsl workflow tool, developed jointly by Argonne and the University of Chicago, facilitates use of the image simulation code imSim, which models just about everything that could affect the camera and how each effect will appear in an actual LSST image.

“The code has to take absolutely every individual photon of light from a distant galaxy and figure out how that would map onto the image sensors of our camera,” explained Villarreal, who is leading the effort to integrate numerous and varied codes.

But it’s a very long way between the foothills of the Andes and far distant galaxies, and there is much to distract a photon on its way to the telescope lens. Earth’s atmosphere and dust from the Milky Way can skew the accuracy of observations, just as light from the sun and the moon can make it harder to observe nearby or fainter objects. Even defects in the camera’s image sensors can mimic physics effects.

One specific example of how these effects can influence real data is called blending, where several galaxies in an image might appear to overlap because the Earth’s atmosphere and the telescope and instrumental effects cause their light to smear together, making it hard to tell whether there is one oddly shaped galaxy or two blended galaxies.

“Disentangling how much comes from one galaxy or another is a very hard problem, so we want to test the algorithms for doing that,” said Carnegie Mellon’s Mandelbaum. “That means we need to make sure that the galaxy catalogs that go into the simulations have roughly the right number of galaxies so that the level of this blending effect is realistic.”
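The blending effect described above can be illustrated with a toy calculation. The sketch below is not imSim or any DESC code; it is a one-dimensional illustration that assumes each galaxy and the combined atmospheric and instrumental smearing can be modeled as Gaussians, and the function names and numbers are invented for the example. It shows two galaxies that are distinct before smearing and appear as a single peak afterward.

```python
import numpy as np

def observed_profile(x, centers, sigma_galaxy, sigma_smear):
    """Brightness along one axis for Gaussian galaxies after Gaussian smearing.

    Assumption: convolving a Gaussian galaxy with a Gaussian blur gives
    another Gaussian whose width is the two widths added in quadrature.
    """
    sigma = np.hypot(sigma_galaxy, sigma_smear)
    return sum(np.exp(-0.5 * ((x - c) / sigma) ** 2) for c in centers)

def count_peaks(profile):
    """Count interior points that are strictly brighter than both neighbors."""
    inner = profile[1:-1]
    return int(np.sum((inner > profile[:-2]) & (inner > profile[2:])))

x = np.linspace(-10.0, 10.0, 2001)   # positions in arbitrary units
centers = (-1.5, 1.5)                # two hypothetical galaxies

sharp = observed_profile(x, centers, sigma_galaxy=1.0, sigma_smear=0.0)
blurred = observed_profile(x, centers, sigma_galaxy=1.0, sigma_smear=1.5)

print("peaks without smearing:", count_peaks(sharp))
print("peaks with smearing:   ", count_peaks(blurred))
```

In this toy setup the two sources merge once the smeared width exceeds half their separation, which is why a realistic density of galaxies in the input catalogs matters for testing deblending algorithms.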

After all of these imperfections are introduced, the resulting “raw” images are handed off to the computational infrastructure working group, which will coordinate efforts to process the simulated data through the LSST’s Data Management (DM) stack, part of the pipeline that will eventually prepare the real images for analysis.

The role of the DM stack is to undo all the defects that were introduced into the images. By determining whether the pipeline can account for these many effects and then reverse them, taking out cosmic dust and telescope defects, the researchers can validate the pipeline’s integrity.

“Once they run the raw images through the data processing pipeline, they take the data and try to tell me what parameters were put into the initial conditions,” said Heitmann. “If they get the answer right, then we know that the processing worked correctly. If not, then they tell us that the input parameters were x, when they were actually y.”
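The logic of that check, simulating data from known inputs, degrading it, processing it and asking whether the inputs can be recovered, can be sketched in a few lines. The example below is a toy stand-in and not the DM stack: it assumes a single input parameter (a slope), a made-up instrumental gain and offset, and a hypothetical tolerance for declaring the recovery a success.

```python
import numpy as np

GAIN, OFFSET = 0.8, 0.1   # hypothetical instrumental effects

def simulate_raw(true_slope, rng, n=500, noise=0.05):
    """Generate 'raw' data: a clean signal distorted by gain, offset and noise."""
    x = np.linspace(0.0, 1.0, n)
    clean = true_slope * x
    raw = GAIN * clean + OFFSET + rng.normal(0.0, noise, n)
    return x, raw

def process(raw):
    """Stand-in for a processing pipeline: undo the known gain and offset."""
    return (raw - OFFSET) / GAIN

def recover_slope(x, y):
    """Least-squares slope for a line through the origin."""
    return float(x @ y / (x @ x))

def validate(pipeline, true_slope=2.5, tolerance=0.05, seed=42):
    """Return True if the pipeline lets us recover the input parameter."""
    rng = np.random.default_rng(seed)
    x, raw = simulate_raw(true_slope, rng)
    estimate = recover_slope(x, pipeline(raw))
    return abs(estimate - true_slope) < tolerance

print("correct pipeline passes:", validate(process))
print("no-op pipeline passes:  ", validate(lambda raw: raw))
```

A pipeline that skips the corrections returns a biased answer and fails the check, which mirrors the case Heitmann describes where the recovered parameters differ from the ones that were put in.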

The cleaned data set finally goes to the analysis working groups overseen by Mandelbaum, the end of the pipeline for this huge data challenge. The process will test analysis algorithms in preparation for the real data, progressively extracting and analyzing finer details derived from the galaxy catalogs, such as the gravitational influence of dark matter, to ensure that they can accurately recover the properties of dark energy.

Epilogue

The script is complete, the players know their parts. The DESC community is ready for opening night, when the universe will open itself to astrophysical review by the LSST. Flaws will be fixed, parts fine-tuned and details will emerge that might move observers to awe.

If all is performed as planned, the catalog of galaxies created by the LSST DESC will help shine light on the darker nature of the universe and, in so doing, match observation to prediction and explain how the curtains might fall on the cosmic stage.

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation’s first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state and municipal agencies to help them solve their specific problems, advance America’s scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy’s Office of Science.

The U.S. Department of Energy’s Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit the Office of Science website.