Untangling dark forces with computing power

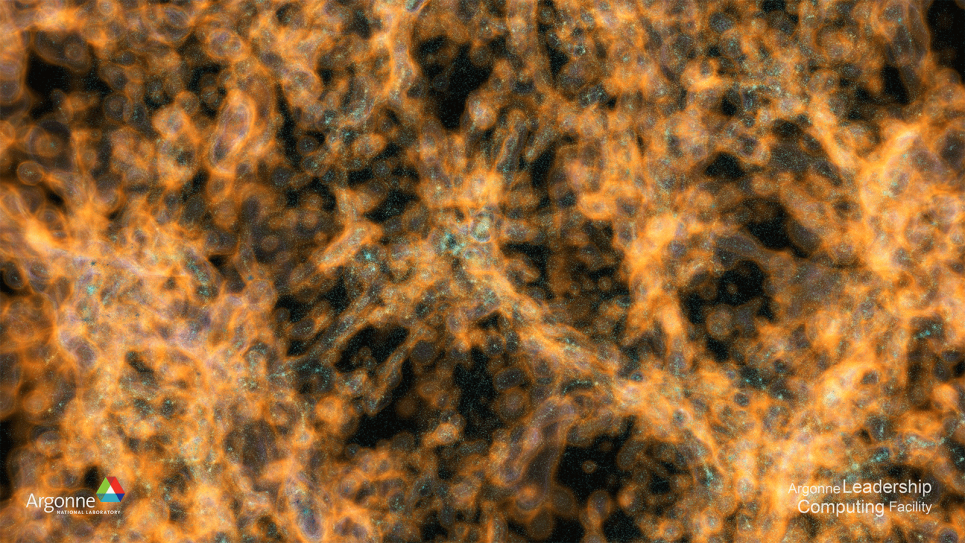

Researchers use supercomputers like Aurora to build realistic models of the universe, running their own virtual universes to investigate hundreds or even thousands of possible scenarios.

Virtual universes are examples of digital twins, sophisticated models of complex systems like the Earth’s climate or the spread of disease. Scientists use them to make fairly accurate predictions about a system’s evolution and as a tool for understanding anomalies, the unexpected events that occur within those systems. It is these irregularities or inconsistencies in a model that could lead researchers to a discovery.

Currently, it is assumed that dark matter, whatever it is, doesn’t interact with itself or with visible matter except through gravity, which acts on it just as it does on everything else. Yet even this limited understanding presents several scenarios in which the presence of dark matter might be detected and its properties investigated.

If, in fact, dark matter is self-interacting, such interactions could alter gravitational dynamics. For example, you can track the orbits of stars near the center of a small galaxy to determine whether the pattern is different from what you would predict through gravity alone.

And because this behavior is dynamical, researchers can simulate it.

“If you give me a model for how the dark matter interacts with itself, I can put that in my simulation code, and I can work out what happens,” says Habib. “I can predict at small scales how the mass distribution will change.”

Because a compelling model of dark matter interactions does not yet exist, researchers run and rerun different models many times, changing parameters along the way. The process continues until a model emerges that closely aligns with observation and offers some inkling of the nature of the interactions.
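The run-and-compare loop described above can be sketched in a few lines. This is only an illustration under stated assumptions: `toy_simulation`, the interaction-strength parameter `sigma`, the cored density profile and the mock observation are all hypothetical stand-ins, not the team's simulation code or data.

```python
import numpy as np

def toy_simulation(sigma, radii):
    """Hypothetical stand-in for one expensive simulation run.

    Assumption for illustration only: stronger self-interaction (sigma)
    produces a larger central core in the mass profile.
    """
    core = 1.0 + sigma
    return core**2 / (core**2 + radii**2)

radii = np.linspace(0.1, 10.0, 50)

# Mock "observation": the toy model at sigma = 0.7 plus measurement noise.
rng = np.random.default_rng(0)
noise = 0.01
observed = toy_simulation(0.7, radii) + rng.normal(0.0, noise, radii.size)

# Rerun the model for many candidate parameter values and score each run
# against the observation with a chi-square statistic.
candidates = np.linspace(0.0, 2.0, 201)
chi2 = np.array([
    np.sum(((toy_simulation(s, radii) - observed) / noise) ** 2)
    for s in candidates
])

best_sigma = candidates[np.argmin(chi2)]
print(f"best-fit sigma: {best_sigma:.2f} after {candidates.size} model runs")
```

Each candidate here costs one model run. That is trivial for a toy function, but it is the step that becomes expensive when every run is a full cosmological simulation.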

That example is just one way of investigating the nooks and crannies of the vast space of cosmological models, notes Habib. Such a process would have taken years using Aurora’s predecessors — powerful supercomputers in their own right and in their own time. But Aurora’s exascale computing power, coupled with integrated AI and statistical methods, dramatically reduces the number of simulations and time needed to get results.

For example, AI is powering a technique called emulation to tackle inverse problems — that is, problems where the goal is determining the cause or system properties from observed effects or data. The new technique tries to match simulations to observations and measurements of some cosmological feature or dynamical property. Until recently, it could take thousands of simulations to explore parameters that might solve a particular problem.

Emulation is a powerful machine learning-based statistical approach that requires only a fraction of that number of simulations to determine parameters that best fit a set of observations.
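A minimal sketch of the idea, under loud assumptions: a handful of runs of a hypothetical `toy_simulation` train a cheap surrogate, and the surrogate, not the simulation, is then searched for the best-fit parameter. A simple polynomial fit stands in here for the machine learning model; the actual emulators used on Aurora are not described in this article, and every name and number below is illustrative.

```python
import numpy as np

def toy_simulation(sigma, radii):
    """Hypothetical stand-in for one expensive simulation run."""
    core = 1.0 + sigma
    return core**2 / (core**2 + radii**2)

radii = np.linspace(0.1, 10.0, 50)

# Step 1: run only a handful of "expensive" simulations.
train_sigma = np.linspace(0.0, 2.0, 8)
train_out = np.array([toy_simulation(s, radii) for s in train_sigma])  # (8, 50)

# Step 2: fit the surrogate, one degree-5 polynomial in sigma per radial bin.
degree = 5
coeffs = np.polyfit(train_sigma, train_out, degree)  # shape (6, 50)

def emulate(sigma):
    """Cheap surrogate prediction for one or many sigma values."""
    powers = np.vander(np.atleast_1d(sigma), degree + 1)  # (n, 6)
    return powers @ coeffs                                # (n, 50)

# Step 3: solve the inverse problem using the surrogate alone.
rng = np.random.default_rng(1)
noise = 0.01
observed = toy_simulation(0.7, radii) + rng.normal(0.0, noise, radii.size)

candidates = np.linspace(0.0, 2.0, 2001)
chi2 = np.sum(((emulate(candidates) - observed) / noise) ** 2, axis=1)
best_sigma = candidates[np.argmin(chi2)]
print(f"best-fit sigma: {best_sigma:.3f} using {train_sigma.size} simulations")
```

The search evaluates 2,001 candidate values but calls the simulation only eight times. The trade-off is that the surrogate is trustworthy only inside the parameter range it was trained on.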

“So, the point is, we can make the process way more efficient,” says Habib.

This is a huge advantage, especially when modeling cosmos-encompassing scenarios, like the expansion of the universe and the role played by dark energy.

“Dark energy is more subtle than dark matter,” he says, “because there are no small-scale dynamical factors associated with it. And because it is affecting the expansion rate of the universe, dark energy is really changing how the universe is behaving on very large scales.”

To get a better handle on the magnitude of the problem, researchers first must measure both the expansion rate of the universe and the speed at which galaxies are moving away from each other. These calculations require the development of extremely large and computationally costly sky maps to virtually visualize and manipulate the forces at work.

And just as with dark matter, researchers can simulate different models of dark energy, trying countless variations of this force and changing parameters until the models begin to agree with observation.

One line of modeling requires going back to the early stages of the universe to measure its expansion rate and then determine whether it has changed over time.

“And if so, does it correspond to what you would expect, or is it different?” asks Habib. “It turns out that the differences are fairly subtle. But part of the reason is that the current model of dark energy is very simple.

“So, at the moment, we’re trying to make more and more accurate predictions for the model with the idea that, if you find the measurements to be at variance with what you’re predicting, then you know there’s something wrong,” he continues. “It won’t tell you what the right answer is, but it tells us that the simple model we have is incorrect. So, then we can develop new ones.”

Rinse and repeat. Thousands, perhaps millions of times.

Illuminating the dark universe

The team will use Aurora’s immense computing power to perform massive simulations of the cosmos, helping to advance the field of computational cosmology and prepare the way for the effective launch of new telescopes and probes.

The work may return answers as to the nature of dark energy and dark matter, in addition to addressing the conundrum of neutrinos, which remain a perplexing piece of the Standard Model of particle physics. Characterizing the total mass of neutrinos has been a Holy Grail of sorts since experiments revealed evidence of three different kinds or “flavors” of the particle, each with its own mass.

But for Habib, the project affords us much more than an answer to these particular riddles. The use of cutting-edge technologies, particularly in computing, has exciting implications for innovations that we often don’t anticipate.

The work also affirms our long relationship with the sky, and through it, the development of some rational understanding of nature that in the past led to calendars and precision navigation, among other human achievements.

“Fundamentally, what we’re doing right now is simply an extension of that long history of humanity’s connection to the stars, the galaxies and everything else,” he says. “There’s an inherent beauty in the whole thing. I mean, look at how excited people get when they see images from Hubble or the new James Webb Space Telescope. The ability to look deep into the universe is pretty astounding because it also tells us a lot about our own place in the big scheme of things.”