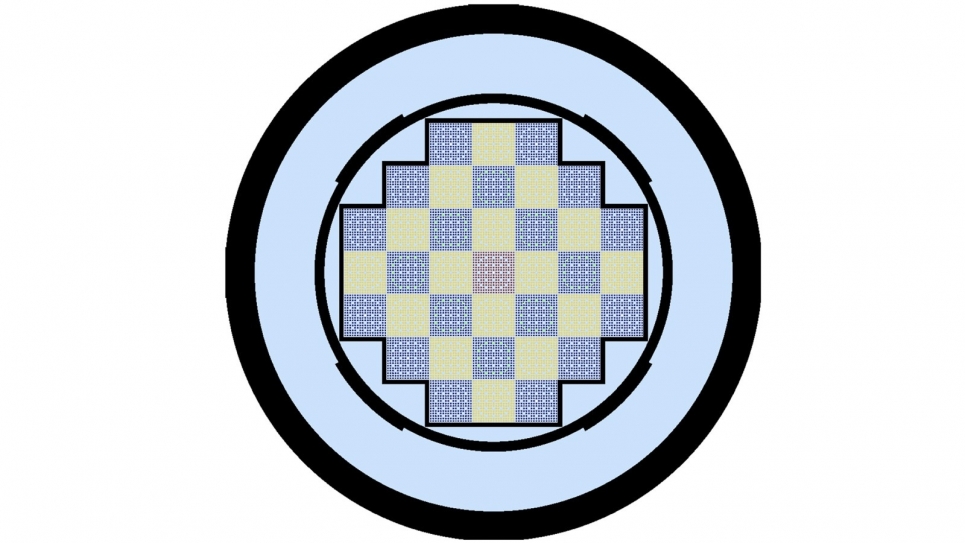

The steady-state turbulent kinetic energy distribution in the nekRS test case.

The team’s research marks an important milestone with the potential to advance efforts to further integrate high-fidelity numerical simulations in actual engineering designs.

The physical behavior inside an operating nuclear reactor can be understood only through simulations on a supercomputer, because of the high-pressure, high-temperature, and radioactive environment inside the reactor core.

The whirls and eddies of coolant that flow around the fuel pins play a critical role in determining the reactor’s thermal-hydraulic performance. They also give nuclear engineers much-needed information about how best to design future nuclear reactor systems, both for normal operation and for stress tolerance.

Large-scale, high-resolution models yield better information that can drive down the cost of eventually building a new, intrinsically safe nuclear reactor. To that end, computational scientists and nuclear engineers at the U.S. Department of Energy’s Argonne National Laboratory have joined forces to complete the first-ever “full-core” pin-resolved computational fluid dynamics (CFD) model of a small modular reactor (SMR) under ExaSMR, part of the DOE’s Exascale Computing Project.

The ultimate research objective of ExaSMR is to carry out full-core multiphysics simulations that couple CFD and neutron dynamics on upcoming exascale supercomputers, such as Aurora, which is scheduled to arrive at Argonne in 2022. The progress achieved, published April 5 in the journal Nuclear Engineering & Design, marks an important milestone that will hopefully inspire researchers to further integrate high-fidelity numerical simulations into actual engineering designs.

This image shows the individual pins in a full-core nuclear reactor simulation, one of the first ever done. (Image by Argonne National Laboratory.)

A nuclear reactor core contains the fuel and coolant needed for the reactor to operate. The core is divided up into several bundles called assemblies, and each assembly contains several hundred individual fuel pins – which in turn contain fuel pellets. Until now, limitations in raw computing power had constrained models, forcing them to address only particular regions of the core.

“As we advance towards exascale computing, we will see more opportunities to reveal large-scale dynamics of these complex structures in regimes that were previously inaccessible, giving us real information that can reshape how we approach the challenges in reactor designs,” according to Argonne nuclear engineer Jun Fang, an author of the study, which was published by ExaSMR teams at Argonne and Elia Merzari’s group at Penn State University.

A key aspect of modeling SMR fuel assemblies is the presence of spacer grids. These grids play an important role in pressurized water reactors, such as the SMR considered in this study, because they create turbulent structures and enhance the capacity of the flow to remove heat from the fuel rods containing uranium.

Instead of creating a computational grid resolving all the local geometric details, the researchers developed a mathematical mechanism to reproduce the overall impact of these structures on the coolant flow without sacrificing accuracy. In doing so, the researchers can successfully scale up the related CFD simulations to an entire SMR core for the first time.

“The mechanisms by which the coolant mixes throughout the core remain regular and relatively consistent; this enables us to leverage high-fidelity simulations of the turbulent flows in a section of the core to enhance the accuracy of our core-wide computational approach,” said Argonne principal nuclear engineer Dillon Shaver.

The strong technical expertise of the ExaSMR teams builds on Argonne’s history of breakthroughs in related research fields, particularly nuclear engineering and computational science. Several decades ago, a group of Argonne scientists led by Paul Fischer pioneered a CFD solver called Nek5000, which was transformative because it allowed users to simulate engineering fluid problems with up to one million parallel threads.

More recently, Nek5000 has been re-engineered into a new solver called nekRS, which uses graphics processing units (GPUs) to accelerate its computations. “Having codes designed for this particular purpose gives us the ability to take full advantage of the raw computing power the supercomputer offers us,” Fang said.

The team’s computations were carried out on supercomputers at the Argonne Leadership Computing Facility (ALCF), the Oak Ridge Leadership Computing Facility (OLCF), and Argonne’s Laboratory Computing Resource Center (LCRC). The ALCF and OLCF are DOE Office of Science User Facilities. The team’s research is funded by the Exascale Computing Project, a joint effort of the DOE’s Office of Science and the National Nuclear Security Administration.

The Argonne Leadership Computing Facility provides supercomputing capabilities to the scientific and engineering community to advance fundamental discovery and understanding in a broad range of disciplines. Supported by the U.S. Department of Energy’s (DOE’s) Office of Science, Advanced Scientific Computing Research (ASCR) program, the ALCF is one of two DOE Leadership Computing Facilities in the nation dedicated to open science.

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation’s first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state and municipal agencies to help them solve their specific problems, advance America’s scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy’s Office of Science.

The U.S. Department of Energy’s Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.