This year’s program was structured around eight overarching topics: hardware architectures; programming models and languages; data-intensive computing and input-output (I/O); data analysis and visualization; numerical algorithms and software for extreme-scale science; performance tools and debuggers; software productivity and sustainability; and machine learning.

“The event is composed of lectures and accompanying hands-on sessions for most topics,” Loy said. “The hands-on sessions, close interaction with the lecturers and access to large DOE systems, such as those at the Argonne Leadership Computing Facility (ALCF), the Oak Ridge Leadership Computing Facility (OLCF) and the National Energy Research Scientific Computing Center (NERSC), are particularly important features of ATPESC.”

The ALCF, OLCF and NERSC are DOE Office of Science User Facilities.

ATPESC lecturer William Gropp, who is the director and chief scientist at the National Center for Supercomputing Applications (NCSA) and Thomas M. Siebel Chair in Computer Science at the University of Illinois Urbana-Champaign, pointed to some additional benefits of attending the training program.

“ATPESC provides an intense, broad and deep introduction to many aspects of high-performance computing, not just one or two,” Gropp said. “Having direct access to some of the leaders in the field is great for asking questions, getting opinions and talking about careers. The participants also benefit from being part of the ATPESC community with their fellow students.”

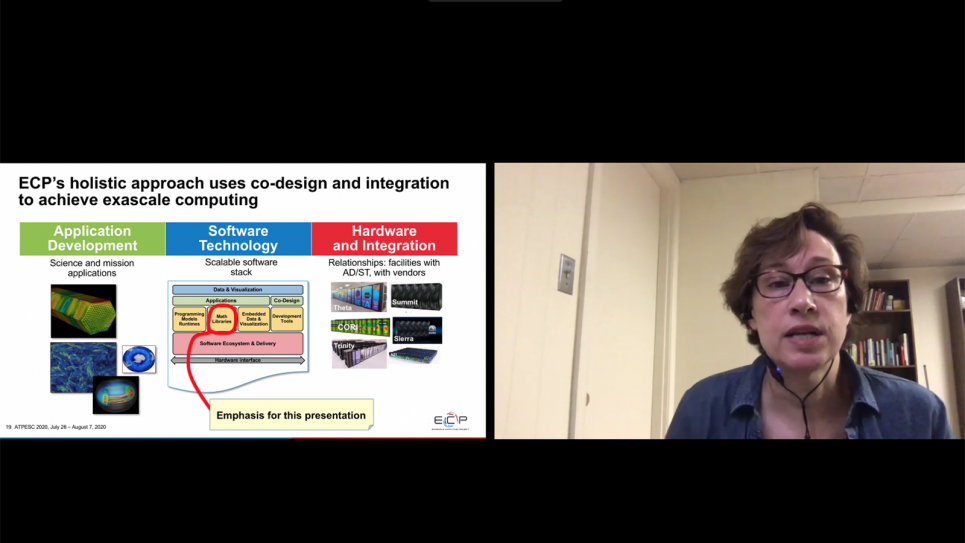

The training program is structured to align with the ECP’s mission to develop a capable computing ecosystem for future exascale supercomputers, including Aurora at Argonne and Frontier at Oak Ridge National Laboratory.

“The ATPESC agenda is always evolving to meet the needs of the next generation of computational scientists. Upcoming leadership-class systems such as Aurora will rely on GPUs (graphics processing units), so we have added new material and refocused existing material in this area,” said Loy, who also serves as the lead for training, debuggers and math libraries at the ALCF.

For example, OpenMP content was adjusted to cover new features that leverage GPUs, and a session on SYCL/DPC++, one of the programming models being developed for exascale systems, was added to the program this year.

“The presentation on SYCL/DPC++ by Argonne’s Thomas Applencourt ended up being the most helpful session for me,” said participant Ral Bielawski, a doctoral student in aerospace engineering at the University of Michigan. “He covered a programming model I had never been exposed to before and provided a solution that would target Intel GPUs, and potentially most GPUs in the future.”

To facilitate the virtual format, ATPESC participants viewed lectures via the Zoom video conferencing service. The Slack instant messaging platform was also used for ATPESC for the first time, as a place to post event announcements and communicate via chat both during and after the lectures.

“I’ve found chat to be an effective way to communicate between audience and speaker,” Gropp said. “It also made it easier for the instructors who were not lecturing to answer some of the questions.”

In previous years, ATPESC included dinner talks and optional activities that would sometimes go as late as 9:30 p.m., but the virtual event this year had shorter days to accommodate different time zones and “the fatigue of being in videoconferences” for two weeks, Loy said.

While the virtual event precluded visiting Argonne in person, “there was a virtual tour of the ALCF on Saturday, and one evening a bunch of us got together for a Zoom happy hour,” said participant Kevin Green, a research scientist in the Department of Computer Science at the University of Saskatchewan in Canada.

But it is the program content that will stick with Green and his fellow participants as they look to apply what they learned to their careers moving forward.

“I’ll be using a lot of the ideas I’ve seen here to update material in our high-performance computing courses,” Green said. “I’ve also gotten a good feel for how we can migrate our current code designs to designs that will perform well across different supercomputing architectures.”

For more information, including videos of previous ATPESC lectures, visit https://extremecomputingtraining.anl.gov.

The Argonne Leadership Computing Facility provides supercomputing capabilities to the scientific and engineering community to advance fundamental discovery and understanding in a broad range of disciplines. Supported by the U.S. Department of Energy’s (DOE’s) Office of Science, Advanced Scientific Computing Research (ASCR) program, the ALCF is one of two DOE Leadership Computing Facilities in the nation dedicated to open science.

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation's first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state and municipal agencies to help them solve their specific problems, advance America's scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy's Office of Science.

The U.S. Department of Energy's Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.