On scales impossible for humans to achieve manually, the next generation of cancer-fighting drugs—along with treatments for other diseases—is being identified molecule by molecule from a vast array of candidate compounds on Argonne National Laboratory’s exascale supercomputer, Aurora.

Housed at the Argonne Leadership Computer Facility (ALCF), a U.S. Department of Energy (DOE) user facility, Aurora’s powerful capabilities have helped researchers overcome computational bottlenecks that arise when assessing certain molecular properties on a large scale.

Such computational power is of vital importance, as high-throughput screening of extensive compound datasets to filter for advantageous properties—especially the ability to interact with relevant biomolecules—represents a promising direction in drug discovery for the treatment of cancer, as well as for response to epidemics like SARS-CoV-2. The team’s machine learning-driven approach offers an efficient means for such screening, which is otherwise cumbersome working with data at these scales.

Indeed, “extensive” understates the number of candidate drugs to be analyzed.

“We’ve been able to successfully screen up to 22 billion drug molecules per hour on Aurora,” said Archit Vasan, an ALCF postdoctoral researcher and lead author of a paper on the successful implementation of his team’s method of drug discovery and its ability to address the problem of extreme-scale compound screening.

SMILES for efficient screening at extreme scales

Traditional structural approaches for assessing the strength of the interaction between two or more molecules, a property called binding affinity, become inefficient when used to analyze data on the massive scales of drug compound databases. Such approaches include a computational simulation technique known as molecular docking.

“Molecular docking is one of the most computationally efficient methods for computing binding affinity, but it still takes around five to ten seconds per compound,” Vasan said. “If you were just screening through thousands of compounds, then it would be okay to take that route.”

To make the molecular docking simulation process more efficient, the researchers turned to machine learning. The researchers devised a workflow to scale model training and inference across multiple supercomputer nodes.

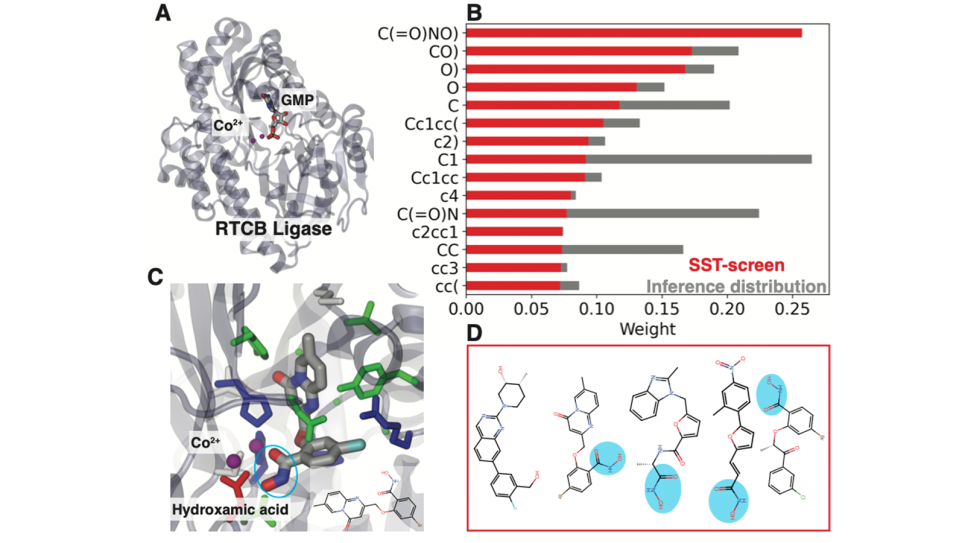

At the workflow’s center is a docking surrogate developed by the team, called the Simple SMILES Transformer (SST), which learns molecular features from the SMILES (Simplified Molecular Input Line Entry System) representation of compounds and approximates their binding affinity. A docking surrogate is an intentionally simplified deep learning model that reduces the complexity of otherwise intractable calculations to thereby speed up computation.

“When you want to screen out and push the envelope really far to screen billions of compounds, the approximations enabled by docking surrogates become crucial as every bit of time spent on each calculation becomes really significant,” Vasan said.

To evaluate the performance and accuracy of their workflow, the team conducted experiments using molecular docking binding affinity data on multiple receptors, comparing SST with another state-of-the-art docking surrogate.

“At first we deployed the workflow using 48 nodes on Polaris, which enabled us to screen some three billion compounds per hour,” Vasan explained. “Running on Aurora, our initial use of 128 nodes allowed us to screen 11 billion compounds in an hour, demonstrating significant performance improvements node for node. When we scaled up to 256 nodes we achieved the 22 billion compounds per hour.”

Assuming the linear scaling holds, the researchers could expect to screen up to a trillion compounds per hour if using all of Aurora’s compute resources, he said.

SST showed comparable accuracy to state-of-the-art surrogate models, with r-squared values (the proportion of variation in dependent variables predictable from independent variables) between 70 and 90 percent on multiple test protein receptors, affirming the capability of SST to learn molecular information directly from language-based data.

Developing for Aurora

The research grew out of an Aurora Early Science Program project centered on targeted drugs.

“There was some work done through that project a few years ago that demonstrated the ability to predict the binding affinity of a drug to a given COVID target,” Vasan explained. “The results themselves were encouraging, but of particular interest to me was the model used. It was very simple, but it was also relatively slow when processing new compounds and depended on the absence of computational bottlenecks.”

Vasan worked to convert the Transformer model into an architecture capable of predicting binding affinity from inputted chemical text representations.

“The goal was to predict binding affinity without needing to do this expensive computational descriptor calculation step,” he stated.

Vasan optimized the tokenization (the process in which the chemical text representation becomes a digital variable) and thereby increased the accuracy, preprocessing speed, and inference speed for the model on the new Transformer architecture.

“The notably faster tokenization preprocessing is one of the significant advantages of the SST approach over alternative methods,” Vasan said. “We ended up with a tenfold acceleration when both preprocessing and inference speed are considered.”

The goal of screening through large chemical databases to identify potential drugs to target proteins motivated Vasan to port the code over to Aurora, key to which was collaboration with Intel engineers.

“We took the architecture and developed it a bit further. We added an MPI element to support processing across multiple nodes of Aurora, while also working to increase performance on a single node—then we just had to parallelize that down to hundreds of nodes on Aurora.”

A key future direction for the workflow involves integrating de-novo drug design, enabling the researchers to scale their efforts to explore the limits of synthesizable compounds within chemical space.