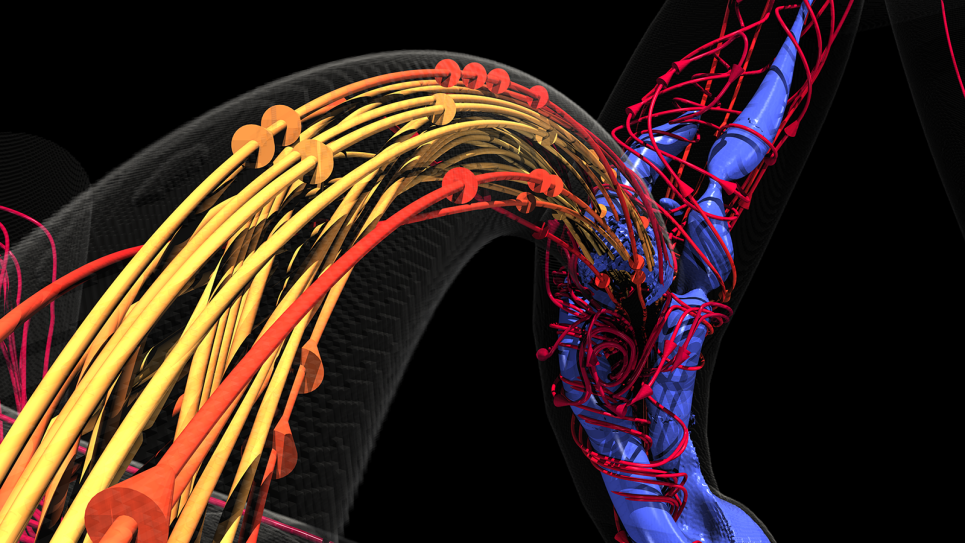

Amanda Randles, an assistant professor at Duke University, is using modeling and simulation to study how cancer spreads through the body. She and her team have developed HARVEY, a model that simulates blood flow throughout the body, and now they want to use it to predict the movement of cancer cells at a microscopic level.

Simulating such a complex process will require an enormous amount of computing power, more than today's high-performance computing (HPC) systems can deliver. With the launch of Aurora, one of the nation's first exascale systems, Randles' team will be able to gain critical insights.

Randles is one of a select few researchers chosen to take part in the Argonne Leadership Computing Facility's (ALCF's) Aurora Early Science Program (ESP). Her project will be among the first to run on Aurora, which will be delivered to Argonne in 2022.

“Tackling our new research into the process of metastasis and performing the intricate simulations needed means we need even greater computing power to handle the massive data sets in real time. The Aurora system will help us meet this need,” said Randles.

Once operational, the Aurora system will be capable of performing an astounding billion-billion calculations per second. Randles' team will use this capability to create higher-resolution, more accurate representations of the geometry of the circulatory system. It will also allow them to simulate fluid flow through the system, along with collections of red blood cells and tumor cells carried in that flow.

Enabling scientists to analyze data on the fly

To ensure they can hit the ground running once Aurora is online, Randles’ team is working with Argonne scientists to prepare their application to run efficiently on the new system. One challenge they must address in the process is the disparity between computation speed and the speed at which data can be saved.

As with other HPC systems, Aurora will perform computation at lightning-fast speeds, but the rate at which data can be stored for the longer term (by being written to disk) cannot keep pace. This is a well-known gap that has only widened over the years.

If this gap were left unaddressed, researchers would have to slow their computation by orders of magnitude to write all of their simulation data to disk. That approach would take a great deal of time and require huge amounts of storage. For Randles' team and many others, it is infeasible given the sheer volume of data and the size of the workload.

As an alternative, the HPC community is pursuing a major effort to enable data analysis to happen in situ (meaning in the computer's memory) while simulations are running. Visualizing and analyzing data this way lets computation proceed quickly while giving researchers the chance to decide which data should be stored long term and which can be discarded.

“If, for example, you identify a particular area of interest within the whole system, you might choose to save data at a higher frequency or higher level of detail just in that small area. That would still enable you to get more science out of the data while reducing the amount of data that you actually have to write to disk,” said Joseph Insley, ALCF visualization and data analysis team lead.
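To make the strategy Insley describes more concrete, here is a minimal Python sketch. It assumes a toy one-dimensional diffusion "simulation" standing in for HARVEY, and every name in it (`step`, `run`, `Output`) and every parameter (`threshold`, `coarse_every`, `stride`) is hypothetical. At each time step an in-memory analysis finds the cells whose value exceeds a threshold (the "area of interest") and keeps that region at full resolution every step. The whole field is kept only every `coarse_every` steps, and only every `stride`-th cell. The `Output` object stands in for what would actually be written to disk.

```python
from dataclasses import dataclass, field


@dataclass
class Output:
    """Hypothetical stand-in for data that would be written to disk."""
    coarse: list = field(default_factory=list)  # (step, downsampled full field)
    roi: list = field(default_factory=list)     # (step, start index, full-res region)

    def values_written(self) -> int:
        return (sum(len(v) for _, v in self.coarse)
                + sum(len(v) for _, _, v in self.roi))


def step(u: list[float], alpha: float = 0.25) -> list[float]:
    """One explicit diffusion step; the two end cells are held fixed."""
    interior = [u[i] + alpha * (u[i - 1] - 2 * u[i] + u[i + 1])
                for i in range(1, len(u) - 1)]
    return [u[0]] + interior + [u[-1]]


def run(n_cells=1000, n_steps=100, threshold=0.1, coarse_every=10, stride=10):
    # Initial condition: a block of high values in the middle of the domain.
    mid = n_cells // 2
    u = [1.0 if mid - 10 <= i < mid + 10 else 0.0 for i in range(n_cells)]
    out = Output()
    for s in range(n_steps):
        u = step(u)  # the "simulation" advances entirely in memory
        # In situ analysis: locate the area of interest without touching disk.
        hot = [i for i, x in enumerate(u) if x > threshold]
        if hot:
            start, end = hot[0], hot[-1] + 1
            out.roi.append((s, start, u[start:end]))  # full resolution, every step
        if s % coarse_every == 0:
            out.coarse.append((s, u[::stride]))  # reduced resolution, occasionally
    return out


if __name__ == "__main__":
    out = run()
    print("values kept:", out.values_written(), "of", 1000 * 100)
```

Writing every step at full resolution would mean 100,000 values in this toy setup. The sketch keeps far fewer while preserving full detail where the threshold test says something interesting is happening. The actual savings depend entirely on the made-up parameters.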

Through a DOE project called SENSEI, Insley and ALCF computer scientist Silvio Rizzi are working with other researchers across the DOE laboratory complex and industry to create a unified interface through which scientists can access a library of frameworks that support in situ data analysis and visualization. With access to early hardware and the Aurora software development kit, the ESP team has been testing and developing these capabilities in advance of the exascale machine’s arrival.
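A single interface in front of several analysis and visualization back ends can be illustrated with a small adapter-style sketch. This is not SENSEI's API. The classes `AnalysisBackend`, `StatsBackend`, `HistogramBackend`, and `InSituBridge`, and the `REGISTRY` mapping, are hypothetical names chosen for illustration. The simulation calls one method on the bridge each step, and the back ends that run are selected by name.

```python
from abc import ABC, abstractmethod


class AnalysisBackend(ABC):
    """Hypothetical interface that every in situ back end implements."""

    @abstractmethod
    def execute(self, step: int, data: list[float]) -> None:
        """Analyze one time step of in-memory simulation data."""


class StatsBackend(AnalysisBackend):
    """Records min, max, and mean of the field at each step."""

    def __init__(self) -> None:
        self.history: list[tuple[int, float, float, float]] = []

    def execute(self, step: int, data: list[float]) -> None:
        self.history.append((step, min(data), max(data), sum(data) / len(data)))


class HistogramBackend(AnalysisBackend):
    """Counts values into equal-width bins over [lo, hi]; outliers go to the end bins."""

    def __init__(self, bins: int = 4, lo: float = 0.0, hi: float = 1.0) -> None:
        self.bins, self.lo, self.hi = bins, lo, hi
        self.counts: dict[int, list[int]] = {}

    def execute(self, step: int, data: list[float]) -> None:
        counts = [0] * self.bins
        width = (self.hi - self.lo) / self.bins
        for x in data:
            idx = int((x - self.lo) / width)
            counts[min(max(idx, 0), self.bins - 1)] += 1
        self.counts[step] = counts


# Back ends selectable by name, e.g. from a run-time configuration file.
REGISTRY = {"stats": StatsBackend, "histogram": HistogramBackend}


class InSituBridge:
    """Single entry point the simulation calls; fans data out to chosen back ends."""

    def __init__(self, names: list[str]) -> None:
        self.backends = [REGISTRY[name]() for name in names]

    def execute(self, step: int, data: list[float]) -> None:
        for backend in self.backends:
            backend.execute(step, data)


if __name__ == "__main__":
    bridge = InSituBridge(["stats", "histogram"])
    bridge.execute(0, [0.0, 0.5, 1.0, 0.25])
    stats, hist = bridge.backends
    print(stats.history, hist.counts)
```

In this design the simulation depends only on `InSituBridge.execute`. Adding a new analysis or visualization tool means registering another class rather than editing the simulation loop.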

“We’ve been using the ALCF’s Theta supercomputer and early Aurora hardware to integrate this library with the HARVEY code. By enabling visualization and analysis on the data while it’s still in memory, the science team will be able to gain more insights from the data than they otherwise would,” said Insley.

Ultimately, the team’s research on Aurora aims to inform the development of new cancer drugs and treatments that have the potential to save lives in years to come.

“By understanding the biological mechanisms behind metastasized cancer cells, too, we hope our work with HARVEY will eventually help doctors and their patients in the fight against cancer,” Randles concluded.

Randles’ ESP project, “Extreme-Scale In-Situ Visualization and Analysis of Fluid-Structure-Interaction Simulations,” is supported by DOE’s Advanced Scientific Computing Research program.

===========

The Argonne Leadership Computing Facility provides supercomputing capabilities to the scientific and engineering community to advance fundamental discovery and understanding in a broad range of disciplines. Supported by the U.S. Department of Energy’s (DOE’s) Office of Science, Advanced Scientific Computing Research (ASCR) program, the ALCF is one of two DOE Leadership Computing Facilities in the nation dedicated to open science.

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation’s first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state and municipal agencies to help them solve their specific problems, advance America’s scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy’s Office of Science.

The U.S. Department of Energy’s Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.