As the use and impact of high-performance computing (HPC) systems have penetrated an ever-wider range of scientific disciplines, the quantities of data generated by researchers have more than kept pace with the accelerating processor speeds driving computing-based research. In particular, advances in feeds from scientific instrumentation such as beamlines, colliders, and space telescopes—among many technologies—have increased data output substantially. Users are producing more data and have more capabilities for using these data. Yet the sheer size and scale of the data can make the seemingly simple task of sharing one’s work a daunting problem.

To deal with the logistics of handling vast quantities of data and to support their rapid, reliable and secure sharing, researchers at the U.S. Department of Energy’s (DOE) Argonne National Laboratory collaborated with Globus to develop and deploy the Petrel data service at the Argonne Leadership Computing Facility (ALCF). Petrel is currently being used for several initiatives at the Advanced Photon Source (APS). The ALCF and APS are DOE Office of Science User Facilities located at Argonne.

“The partnering of unique scientific resources like the ALCF and the APS is changing the landscape of high-performance computing,” said Michael Papka, director of the ALCF. “While the scale of data these facilities can generate is a driver of new methods and techniques for obtaining results, it also necessitates the development of adaptive new infrastructure for storage and distribution to make that increased scale tractable. These trends will continue to develop as we enter the exascale era, with the forthcoming arrival of our next-generation systems Polaris and Aurora set to further amplify the role of data-driven discovery.”

In addition, Eagle, the ALCF’s new global filesystem, brings still larger and more capable production-level file sharing to facility users.

While the ALCF has always had capacious storage systems, access was historically limited by facility account and credential requirements. Petrel was designed to facilitate straightforward sharing with outside collaborators.

“There was growing demand for a way to distribute data more widely so as to easily engage multiple institutions,” said Ian Foster, director of Argonne’s Data Science and Learning (DSL) division. “The remedy was to allocate a more or less broadly accessible storage system and, crucially, to make it manageable via Globus protocols, which are mechanisms for controlling the flow of data, as well as who can see it.”

Users can grant access to their collaborators by means of various application programming interfaces (APIs), making temporary facility accounts or credentials unnecessary. The interactivity permitted by the APIs distinguishes Petrel from the ALCF’s previous storage systems and presents users with potentially endless possibilities for data control and distribution. Direct connections to high-speed external networks permit data access at many gigabytes per second.
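As a rough illustration of what API-driven sharing can look like, the sketch below builds an access rule that grants one collaborator read-only access to a single folder. The field names follow the access-rule document used by the Globus Transfer API as we understand it; the helper name `build_access_rule`, the placeholder identity and the folder path are hypothetical and do not come from the Petrel deployment itself.

```python
"""Build a Globus-style access rule for sharing one folder with a collaborator."""

VALID_PERMISSIONS = {"r", "rw"}  # read-only or read-write


def build_access_rule(identity_id: str, path: str, permissions: str = "r") -> dict:
    """Return an access-rule document granting `identity_id` access to `path`."""
    if permissions not in VALID_PERMISSIONS:
        raise ValueError(f"permissions must be one of {sorted(VALID_PERMISSIONS)}")
    # Rules are written against directories, so require an absolute path
    # with a trailing slash.
    if not (path.startswith("/") and path.endswith("/")):
        raise ValueError("path must be an absolute directory path ending in '/'")
    return {
        "DATA_TYPE": "access",
        "principal_type": "identity",
        "principal": identity_id,
        "path": path,
        "permissions": permissions,
    }


if __name__ == "__main__":
    # Placeholder identity UUID and folder; both are hypothetical.
    rule = build_access_rule(
        "00000000-0000-0000-0000-000000000000", "/shared/scan_results/", "r"
    )
    print(rule)
    # Assumption: with the globus-sdk package installed and an authorized
    # TransferClient `tc`, a rule like this could be submitted with
    #     tc.add_endpoint_acl_rule(collection_id, rule)
```

Because the rule names a collaborator's existing identity rather than a facility login, no temporary ALCF account needs to be created for that person.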

By breaking down barriers to large-scale data sharing, Petrel is helping to advance science at data-intensive facilities like the APS.

“Petrel has been critical in integrating research conducted at APS beamlines with the HPC resources of the ALCF, and in disseminating scientific results to the APS user community in a timely fashion,” said Nicholas Schwarz, principal computer scientist and leader of the X-Ray Science Division (XSD) Scientific Software Engineering and Data Management Group at the APS. “Whether being employed to develop new materials, probe the structure of protein molecules, or explore the structure of matter, data collected at the APS convey an extraordinary amount of information — so much, in fact, that not only do its collation and analysis require powerful computational systems, but its delivery for those purposes can prove extremely challenging.”

The following examples illustrate how a few ongoing projects are leveraging Petrel to manage and share massive scientific datasets.

Brain maps

A connectome — a map of all the neurons in a brain and the connections between them — is very large, even if the brain being mapped is very small: a connectome detailing just a cubic centimeter of brain matter runs into the petabytes.

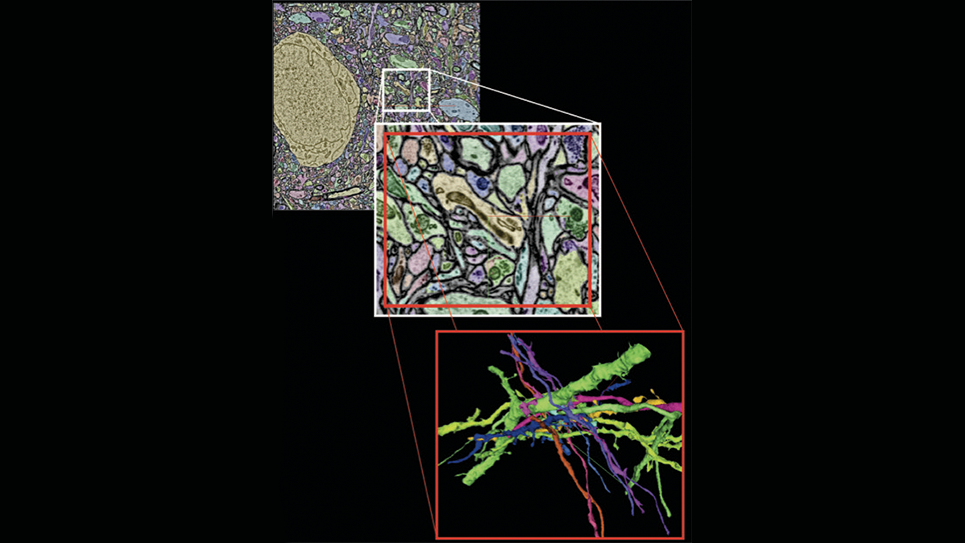

One project led by Argonne and University of Chicago researchers is on a trajectory toward creating a connectome of a mouse brain. Many geographically disparate teams — neuroscientists, biologists, computer scientists and data scientists — are involved in this complex work. With APS beam time and electron microscopes, researchers generate neurocartographic datasets using nanometer-resolution serial-section electron microscopy and micron-resolution synchrotron-based X-ray microscopy experiments to capture images of every cell. The data must be reconstructed via a meticulous analysis process, in concert with biologists proofreading the initial reconstructions to achieve a final result, which is then made available to researchers for further study.

As the project already occupies petabytes and will eventually grow to exabytes of storage, the distribution itself requires careful effort. A special pipeline and repository were built to enable dynamic interactive exploration, granting researchers around the world the ability to utilize the reconstructed data to advance our understanding of neuroanatomy and to probe neurological conditions such as Alzheimer’s and autism.
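A back-of-the-envelope calculation shows why distribution at this scale takes planning. The sketch below assumes a sustained transfer rate of 10 gigabytes per second, an illustrative figure chosen here rather than a measured Petrel number, and uses decimal units (1 PB = 10^15 bytes).

```python
"""Estimate how long it takes to move very large datasets at a fixed rate."""

GB = 10**9   # bytes in a gigabyte (decimal)
PB = 10**15  # bytes in a petabyte
EB = 10**18  # bytes in an exabyte

ASSUMED_RATE = 10 * GB  # bytes per second; illustrative assumption only


def transfer_time_seconds(size_bytes: float, rate_bytes_per_s: float) -> float:
    """Return the idealized transfer time, ignoring overhead and retries."""
    if rate_bytes_per_s <= 0:
        raise ValueError("rate must be positive")
    return size_bytes / rate_bytes_per_s


if __name__ == "__main__":
    for label, size in (("1 PB", PB), ("1 EB", EB)):
        seconds = transfer_time_seconds(size, ASSUMED_RATE)
        print(f"{label}: {seconds / 3600:,.1f} hours ({seconds / 86400:,.1f} days)")
```

Under that assumption, a single petabyte takes a little over a day to move, and an exabyte would take more than three years, which is why analysis is increasingly brought to the data rather than the other way around.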

Materials science

Similar to the neurocartographic repository is a data trove of interest to chemists and materials scientists. Researchers at the APS feed data from X-ray photon correlation spectroscopy (XPCS) experiments to ALCF web portals that dynamically update with each new sample. The data are displayed visually as interactive 2D and 3D models buttressed with crystal maps, histograms and material correlation graphs.

Also significant for materials science is the Materials Data Facility (MDF). Enormous in size and scope, the MDF brings together and streamlines access to hundreds of terabytes of materials science data from numerous sources. It automates data ingestion and indexing, and simplifies the publication process irrespective of data size. The result is a platform that enables unified search and retrieval across millions of disparate records and provides APIs for data creation and consumption. Users can obtain unique identifiers for their data and control who can access them.

Machine learning methods are easily accessed and executed in the MDF. An associated Kubernetes-based computational cluster, PetrelKube, permits analyses to be performed on datasets stored in the Petrel system. As a result, the MDF emerges as a powerful instrument for enabling data-driven discoveries in chemistry and materials science.

“The MDF was designed to meet a variety of emerging data challenges,” said Ben Blaiszik, a researcher at Argonne and the University of Chicago. “Funding agencies have increasingly stricter requirements for data management, there is a growing emphasis in scientific research on replicability and provenance, and data are growing in size and heterogeneity. The hope is that the MDF, by featuring services for both data publication and discovery, will promote greater data sharing and reuse, as well as improved self-curation, while also empowering users to identify powerful new tools and approaches to apply in their own work.”

COVID-19

During the coronavirus pandemic, Petrel has been instrumental in accelerating data-intensive COVID-19 research that leverages ALCF resources.

To elucidate the nature of the virus and identify potential therapeutics, researchers are using supercomputers to plumb a massive pool of billions of small molecules and execute calculations to estimate their potential properties. Associated descriptors are used to train machine learning models for the identification of molecules that could dock well with viral proteins; candidates are subsequently modeled in molecular dynamics simulations, and the best-performing among them are selected for laboratory synthesis.

“We’ve got hundreds of terabytes of data that have been created by different members of this collaboration, and we’re using Petrel to organize all of those data,” Foster said. “In tandem with a relational database we’ve constructed, Petrel has become the place where we collect, share and access all of our computed results, so it’s become a vital component of this important work.”

What comes next

Because user requirements continue to change, the ALCF continues to evolve. The arrival of the data service Eagle has brought larger and more capable production-level file sharing to the ALCF. Compared to Petrel, Eagle enables even broader distribution of reassembled data acquired from facilities such as the APS. Thanks to the service, a wider community will be able to access uploaded data, and ALCF users will be able to directly access those data for analysis. Non-ALCF users throughout the scientific community will have the ability to write data to and read data from Globus endpoint shares on the Eagle filesystem. Eagle is also designed to foster experimentation, allowing analysts to write new algorithms so as to attempt analyses that have never been performed.

Eagle’s fully supported production environment is the next step in the expansion of edge services that blur the boundaries between experimental laboratories and computing facilities. The use and prominence of such services at the ALCF are expected only to grow as they become more integral to the facility’s ability to deliver data-driven scientific discoveries.

==========

The Argonne Leadership Computing Facility provides supercomputing capabilities to the scientific and engineering community to advance fundamental discovery and understanding in a broad range of disciplines. Supported by the U.S. Department of Energy’s (DOE’s) Office of Science, Advanced Scientific Computing Research (ASCR) program, the ALCF is one of two DOE Leadership Computing Facilities in the nation dedicated to open science.

About the Advanced Photon Source

The U.S. Department of Energy Office of Science’s Advanced Photon Source (APS) at Argonne National Laboratory is one of the world’s most productive X-ray light source facilities. The APS provides high-brightness X-ray beams to a diverse community of researchers in materials science, chemistry, condensed matter physics, the life and environmental sciences, and applied research. These X-rays are ideally suited for explorations of materials and biological structures; elemental distribution; chemical, magnetic, electronic states; and a wide range of technologically important engineering systems from batteries to fuel injector sprays, all of which are the foundations of our nation’s economic, technological, and physical well-being. Each year, more than 5,000 researchers use the APS to produce over 2,000 publications detailing impactful discoveries, and solve more vital biological protein structures than users of any other X-ray light source research facility. APS scientists and engineers innovate technology that is at the heart of advancing accelerator and light-source operations. This includes the insertion devices that produce extreme-brightness X-rays prized by researchers, lenses that focus the X-rays down to a few nanometers, instrumentation that maximizes the way the X-rays interact with samples being studied, and software that gathers and manages the massive quantity of data resulting from discovery research at the APS.

This research used resources of the Advanced Photon Source, a U.S. DOE Office of Science User Facility operated for the DOE Office of Science by Argonne National Laboratory under Contract No. DE-AC02-06CH11357.

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation’s first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state and municipal agencies to help them solve their specific problems, advance America’s scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy’s Office of Science.

The U.S. Department of Energy’s Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.