Aurora is being installed in a new wing of the ALCF's data center. (Image: Argonne National Laboratory)

Argonne National Laboratory has made some significant facility upgrades, including utility-scale electrical and mechanical work, to get ready for its next-generation supercomputer.

When it comes to new supercomputers, a machine’s computational horsepower often gets the most attention. And while the novel computing hardware that gives supercomputers their processing power is indeed an engineering marvel, so too is the infrastructure required to operate the massive, world-class systems.

At the U.S. Department of Energy’s (DOE) Argonne National Laboratory, work has been underway for the past several years to expand and upgrade the data center at the Argonne Leadership Computing Facility (ALCF) that will house the upcoming Aurora exascale supercomputer. The ALCF is a DOE Office of Science user facility.

“Preparing for a new supercomputer requires years of planning, coordination, and collaboration,” said Susan Coghlan, ALCF Project Director for Aurora. “Aurora is our largest and most powerful supercomputer yet so we’ve had to do some substantial facility upgrades to get ready for the system. We’ve transformed our building with the addition of new data center space, mechanical rooms, and equipment that significantly increased our power and cooling capacity.”

Built by Intel and Hewlett Packard Enterprise (HPE), Aurora will theoretically be capable of delivering more than two exaflops of computing power, or more than two billion billion calculations per second, when it’s powered on. The new supercomputer will follow the ALCF’s previous and current systems – Intrepid, Mira, Theta, and Polaris – to deliver on the facility’s mission to provide leading-edge supercomputing resources that enable breakthroughs in science and engineering. Open to researchers from across the world, ALCF supercomputers are used to tackle a wide range of scientific problems, including designing more efficient airplanes, investigating the mysteries of the cosmos, modeling the impacts of climate change, and accelerating the discovery of new materials.

“With Aurora, we’re building a machine that will be able to do artificial intelligence, big data analysis, and high-fidelity simulations at a scale we haven’t seen before,” said ALCF Director Michael Papka. “It is going to be a game-changing tool for the scientific community.”

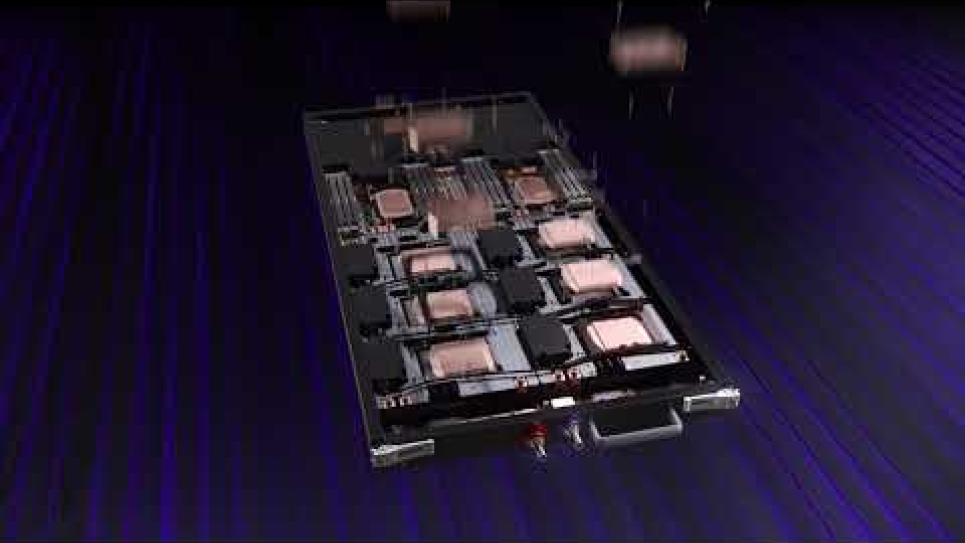

Over the past year, the physical Aurora system has begun to take shape with the delivery and installation of its computer racks and several components, including a test and development platform named Sunspot, the HPE Slingshot interconnect technology, and the Intel DAOS (Distributed Asynchronous Object Storage) storage system. Occupying the space of two professional basketball courts, Aurora is made up of rows of supercomputer cabinets that stand over 8 feet tall. The cabinets are outfitted with more than 300 miles of networking cables, countless red and blue hoses that pipe water in and out to cool the system, and specialized piping and equipment that bring the water in from beneath the data center floor and the electrical power from the floor above.

The installation continues this fall with the phased delivery of Intel’s state-of-the-art Ponte Vecchio GPUs (graphics processing units) and Sapphire Rapids CPUs (central processing units). The system is slated to be completed next year with an upgrade to Sapphire Rapids CPUs with high-bandwidth memory.

While the supercomputer is nearing completion, the work to ready the Argonne site for Aurora has been years in the making. As Coghlan noted, the process of deploying a new supercomputer begins with the major facility upgrades necessary to operate the system, including utility-scale electrical and mechanical work.

Because Aurora is a liquid-cooled system, Argonne had to upgrade its cooling capacity to pump 44,000 gallons of water through a complex loop of pipes that connects to cooling towers, chillers, heat exchangers, a filtration system, and other components. With pipes ranging from 4 inches to 30 inches in diameter, the cooling system ensures the water is at the required temperature, pressure, and purity levels as it passes through the Aurora hardware.

The electrical room, which is located on the second floor above the data center, contains 14 substations that provide 60 megawatts of capacity to power Aurora, future Argonne computing systems, and the building’s day-to-day electricity needs. The room is outfitted with a large ceiling hatch so the substations can be lowered in (and out if needed) by construction cranes.

“When we’re doing utility-scale work to upgrade power and cooling capacity, we aim to create an infrastructure that can support future systems as well,” Coghlan said. “For example, Polaris, Theta, and our AI Testbed systems are all currently running off of the power and cooling capacity that was put in place for our now-retired Mira supercomputer over a decade ago.”

Once the major facility upgrades were in place, the team moved on to data center enhancements. Focused on the machine room, this work included making sure power is delivered to the right locations at the right voltage, installing heavy-duty floor tiles to support the 600-ton supercomputer, and putting in pipes to link the water loop to Aurora.

Construction work at this scale always comes with challenges, but many Aurora facility upgrades were carried out during the COVID-19 pandemic, which created some unforeseen issues related to contractor access and supply chain disruptions.

“Preparing for a new supercomputer during the pandemic definitely caused some unexpected hurdles,” Coghlan said. “But Argonne and our partners put protocols in place to ensure we could continue to work safely and mitigate the impacts of COVID as much as possible.”

Because supply chain constraints have delayed various parts, the Aurora team has been building the supercomputer piece by piece as components have become available.

“We’ve basically assembled and built the entire system on the data center floor,” Coghlan said. “Instead of waiting for every part to be ready at once, we decided to take whatever parts and pieces we could get. We’ve had to be agile, adapting and modifying the plan on the fly to look at what we can build and what we have to push off to a later date.”

Having a majority of the physical system and supporting infrastructure in place has allowed the Argonne-Intel-HPE team to test and fine-tune various components, such as DAOS and the cooling loop, ahead of the supercomputer’s deployment.

“It’s been amazing to see our building go from a construction zone to a new state-of-the-art data center,” Papka said. “We can’t wait to power on Aurora, open it up to the research community, and work with them to take their science to new levels.”

==========

The Argonne Leadership Computing Facility provides supercomputing capabilities to the scientific and engineering community to advance fundamental discovery and understanding in a broad range of disciplines. Supported by the U.S. Department of Energy’s (DOE’s) Office of Science, Advanced Scientific Computing Research (ASCR) program, the ALCF is one of two DOE Leadership Computing Facilities in the nation dedicated to open science.

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation's first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state and municipal agencies to help them solve their specific problems, advance America's scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy's Office of Science.

The U.S. Department of Energy's Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science