ALCF supercomputing and AI resources enable research breakthroughs in 2024

As 2024 draws to a close, we take a look back at some of the innovative research campaigns carried out by the ALCF user community.

Over the past year, researchers from across the world used supercomputing and AI resources at the Argonne Leadership Computing Facility (ALCF) to drive breakthroughs in fields ranging from protein design and materials discovery to cosmology and fusion energy science. The ALCF is a U.S. Department of Energy Office of Science user facility at Argonne National Laboratory.

At the same time, the ALCF continued its efforts to deliver state-of-the-art high-performance computing (HPC) and AI resources to the scientific community. Notably, Argonne’s Aurora supercomputer, which will be deployed for science early next year, officially surpassed the exascale threshold and earned the top ranking in a measure of AI performance. Meanwhile, the ALCF AI Testbed expanded its infrastructure and reach, adding new systems and supporting the first round of projects allocated through the National Artificial Intelligence Research Resource Pilot.

Highlighting the impact of supercomputing-driven research, David Baker, a longtime ALCF user from the University of Washington, was awarded the 2024 Nobel Prize in Chemistry for his pioneering work in computational protein design.

The ALCF team also continued to make progress in developing capabilities for an Integrated Research Infrastructure that connects DOE’s powerful supercomputers with large-scale experiments to streamline data-intensive science.

To broaden engagement with academic institutions, the ALCF launched the Lighthouse Initiative, a new program aimed at building enduring partnerships with U.S. universities. As part of the initial pilot phase, the ALCF has established partnerships with the University of Chicago, the University of Illinois Chicago, and the University of Wyoming.

At the heart of all the ALCF’s work is the mission to enable breakthroughs in science and engineering. The highlights below revisit some of the innovative research campaigns carried out by the ALCF user community this year. For more examples of the groundbreaking science supported by ALCF computing resources, explore the ALCF’s 2024 Science Report.

AI-driven protein design

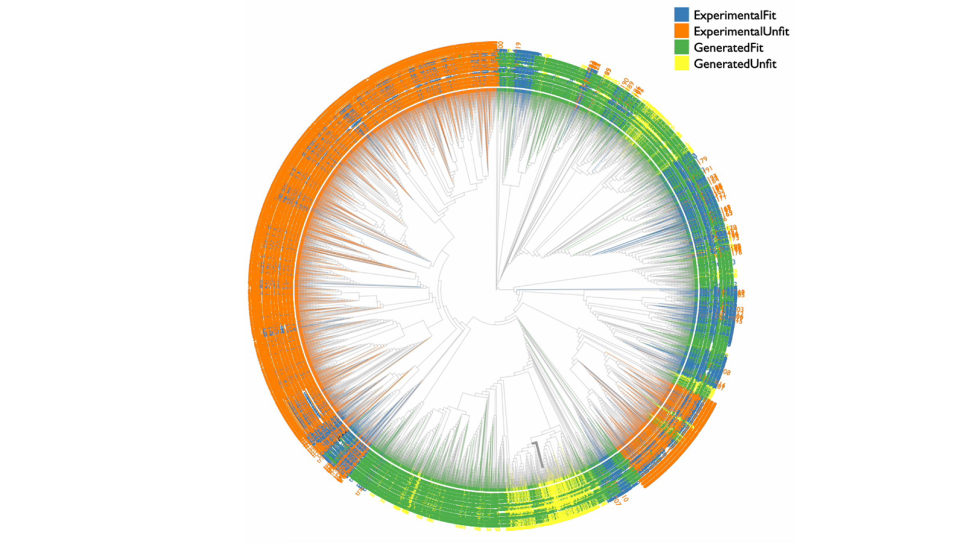

Phylogenetic tree visualization of yeast sequences. The tree shows four categories of sequences from the HIS7 family: experimental fit, experimental unfit, generated fit, and generated unfit. Image: https://doi.ieeecomputersociety.org/10.1109/SC41406.2024.00013

Argonne researchers leveraged Aurora and four other powerful supercomputers to develop an innovative framework to accelerate the design of new proteins. A finalist for the prestigious Gordon Bell Prize, the team’s MProt-DPO framework was designed to integrate different types of data streams, or “multimodal data.” It combines traditional protein sequence data with experimental results, molecular simulations, and even text-based narratives that provide detailed insights into each protein’s properties. This multimodal approach has the potential to speed up protein discovery for medicines, catalysts, and other applications. MProt-DPO is also helping to advance Argonne’s broader AI for science and autonomous discovery initiatives. The tool’s use of multimodal data is central to the ongoing efforts to develop AuroraGPT, a foundation model designed to aid in autonomous scientific exploration across disciplines.

Simulating the cosmos to prepare for state-of-the-art telescopes

This synthetic image is a slice of a much larger simulation depicting the cosmos as NASA's Nancy Grace Roman Space Telescope will see it when it launches by May 2027. Every blob and speck of light represents a distant galaxy (except for the urchin-like spiky dots, which represent foreground stars in our Milky Way galaxy). (Image by C. Hirata and K. Cao (Ohio State University) and NASA’s Goddard Space Flight Center)

To prepare for future observations from two state-of-the-art telescopes, researchers from DOE and NASA joined forces to use the ALCF's Theta supercomputer to create nearly 4 million simulated images that depict the cosmos as NASA’s Nancy Grace Roman Space Telescope and the NSF-DOE Rubin Observatory will see it. The simulations—the first of their kind to factor in the instrument performance of the telescopes—will help researchers understand signatures that each instrument imprints on the images and iron out data processing methods now so they can decipher future data correctly. Ultimately, the team’s work will enable scientists to comb through the observatories’ future data in search of tiny features that will help them unravel the biggest mysteries in cosmology.

Leveraging AI to enhance weather prediction

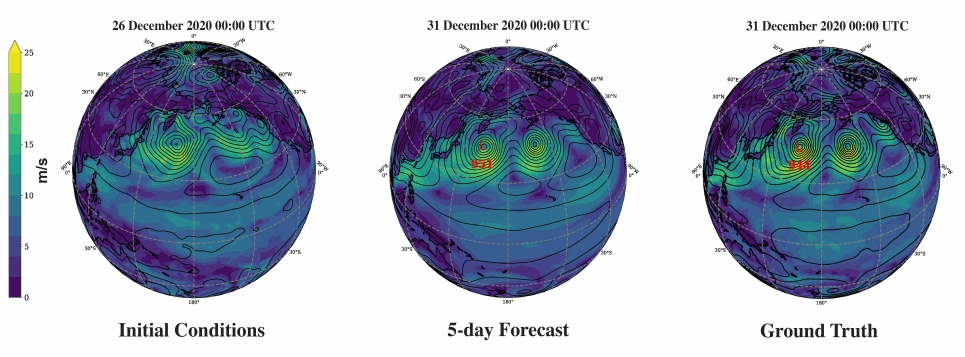

Illustration of a six-day forecast of 10-meter wind speed (color-fill) and mean sea level pressure (contours) using a high-resolution version of Stormer (HR-Stormer) run at 30-kilometer horizontal resolution. (Image: Troy Arcomano, Argonne National Laboratory)

Using the ALCF’s Polaris supercomputer, a team of researchers from Argonne and the University of California, Los Angeles, developed Stormer, a scalable transformer model for weather forecasting. Stormer’s ability to maintain accuracy over extended periods marks a substantial improvement in medium-range weather prediction capabilities, providing a powerful new tool for generating more reliable predictions to address issues related to extreme weather events. In addition, the efficiency of Stormer’s training process can inform the development of future weather forecasting models to make high-precision predictions more accessible and less resource-intensive.

Demonstrating the theoretical power of quantum computing

Quantum algorithm calculations were performed on the Polaris supercomputer at the Argonne Leadership Computing Facility. (Image by Argonne National Laboratory.)

Researchers from JPMorgan Chase, Argonne, and Quantinuum used the ALCF’s Polaris supercomputer to demonstrate clear evidence of a quantum algorithmic speedup for the quantum approximate optimization algorithm. The results show how high-performance computing can complement and advance quantum information science, and represent an important step toward unlocking the potential of quantum computing.

Toward the design of fusion power plants

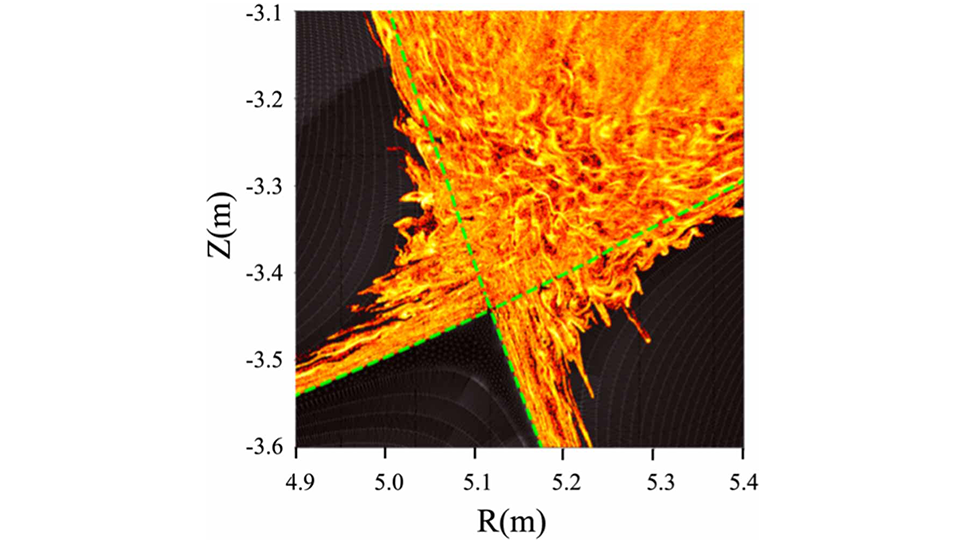

XGC prediction of the turbulent homoclinic tangles in the 15 MA ITER edge in the stationary operation phase without edge localized mode activity or magnetic perturbation coils. Shown here is a snapshot Poincare puncture plot of turbulent magnetic field lines that started poloidally uniformly from the pedestal region. Image: https://doi.org/10.1088/1741-4326/ad3b1e

A research team led by DOE’s Princeton Plasma Physics Laboratory leveraged ALCF supercomputers to advance our understanding of fundamental edge plasma physics in fusion reactors, answering questions critical to the successful operation of ITER and to the design of fusion power plants. First-principles simulations carried out on Polaris and Aurora are providing insights into issues with power exhaust, including mitigating stationary heat-flux densities and avoiding unacceptably high transient power flow to material walls.

Mapping out the possibilities of new processors and accelerators

The ALCF AI Testbed's Cerebras system. Image: Argonne National Laboratory

Argonne researchers examined the feasibility of performing continuous energy Monte Carlo (MC) particle transport on the ALCF AI Testbed’s Cerebras Wafer-Scale Engine 2 (WSE-2). The researchers ported a key kernel from the MC transport algorithm to the Cerebras Software Language programming model and evaluated its performance on the WSE-2, where the kernel ran up to 130 times faster than on traditional processors. The team’s analysis suggests that a wide variety of complex and irregular simulation methods could be mapped efficiently onto AI accelerators like the WSE-2. MC simulations themselves offer the potential to fill in crucial gaps in experimental and operational nuclear reactor data.

Accelerating drug discovery with machine learning

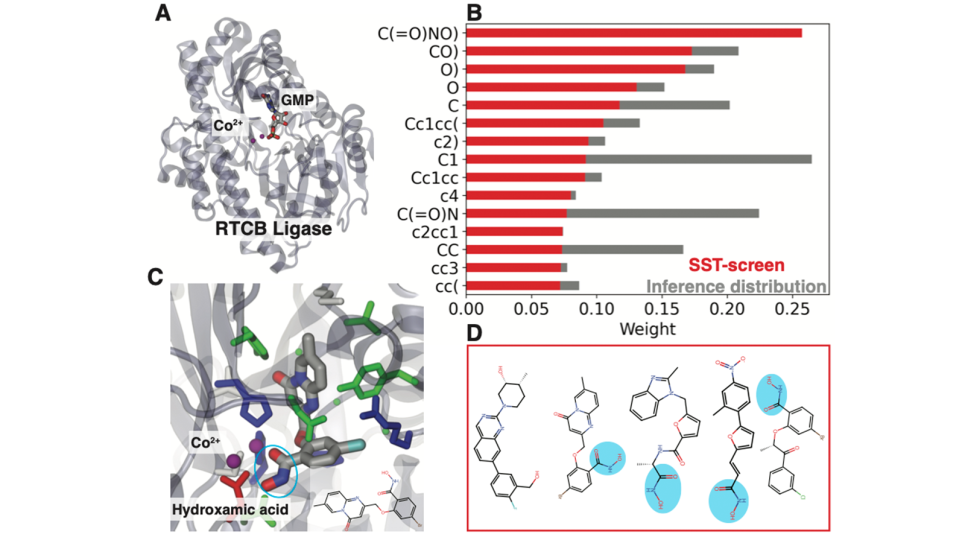

Results of a screening run performed on Aurora to target the oncoprotein RTCB ligase, illustrating SST’s ability to identify compounds with key chemical motifs for binding. The compounds identified share a hydroxamic acid group, a motif that commonly chelates metal ions such as the one in the active site of RTCB. Image: Archit Vasan, Argonne National Laboratory

Argonne researchers used the Aurora supercomputer and advanced machine learning techniques to overcome computational bottlenecks that arise when assessing the molecular properties of potential drug compounds on a large scale. High-throughput screening of extensive compound databases represents a promising direction for the discovery of treatments for cancer and infectious diseases. With Aurora, the researchers have been able to successfully screen as many as 22 billion drug molecules per hour.

Enhancing AI models for materials discovery

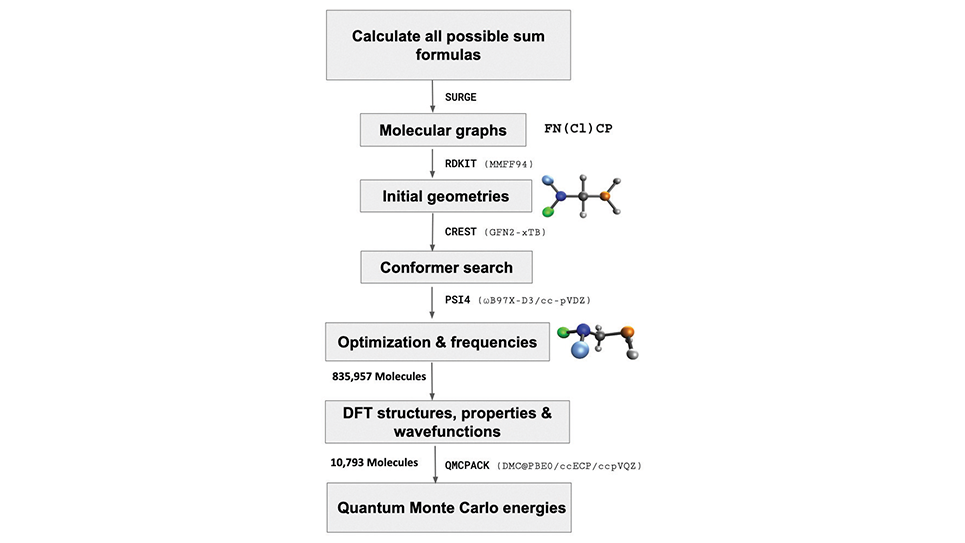

Workflow used to generate the VQM24 dataset. Image: https://arxiv.org/pdf/2405.05961

Navigating the vast chemical compound space (CCS) to identify molecules with desirable properties is critical to the design and discovery of new materials for batteries, catalysts, and other important applications. Machine learning and AI techniques have emerged as powerful tools for accelerating explorations of CCS. Using ALCF supercomputers, a multi-institutional research team generated a comprehensive quantum mechanical dataset, VQM24, to enhance the training and testing of machine learning models for materials discovery. By providing a more complete and accurate representation of the chemical compound space, VQM24 can help researchers improve the predictive power of scalable and generative AI models of real quantum systems.

Near-real-time cross-facility data processing

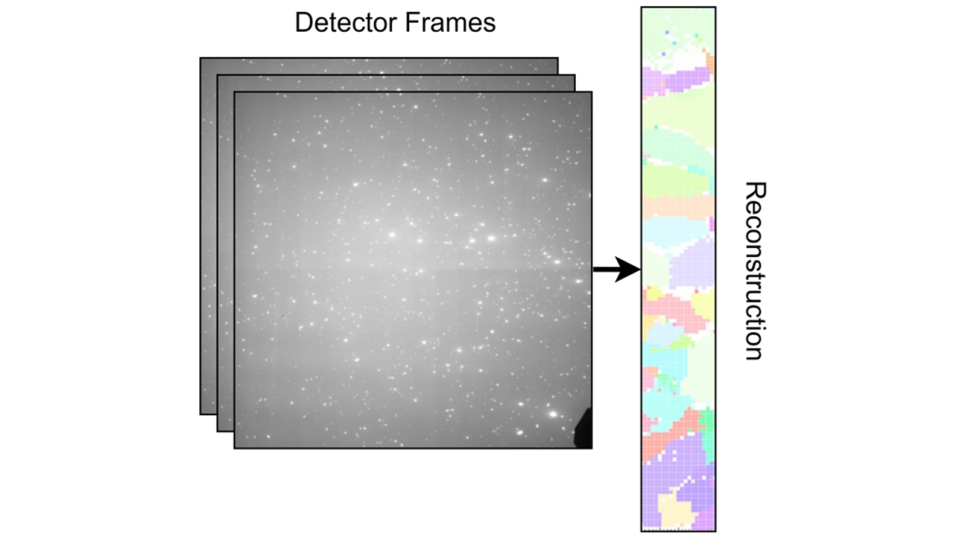

An example of an aluminum dataset and the corresponding reconstruction. This dataset contains 20 points and a total of 8,020 frames. Each point is processed into a vertical slice of the 2D reconstruction displayed on the right. The reconstruction segment has a 1 micron resolution and the colors display the orientation of the crystalline grains within the sample. Image: https://doi.org/10.1145/3624062.3624613

To demonstrate the capabilities of a data pipeline connecting the ALCF and the Advanced Photon Source (APS), Argonne researchers carried out a study focused on Laue microdiffraction, a technique used to analyze materials with crystalline structures. The team's fully automated pipeline leveraged the ALCF’s Polaris supercomputer to rapidly reconstruct data obtained from an experiment, returning reconstructed scans to scientists within 15 minutes of the data being sent to the ALCF. Their work to enable experiment-time data analysis is helping pave the way for a broader Integrated Research Infrastructure that seamlessly links supercomputers with large-scale experiments. The APS is a DOE Office of Science user facility.

ALCF teams receive Best Paper awards at SC24 workshops

ALCF's Victor Mateevitsi (center) receives the Best Paper award at the In Situ Infrastructures for Enabling Extreme-Scale Analysis and Visualization Workshop at SC24.

Two ALCF teams received Best Paper awards at workshops held during the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC24) in Atlanta in November. The teams were recognized for their work to advance in-situ visualization capabilities and to provide performance benchmarking data on the Intel GPUs that power the ALCF’s Aurora supercomputer. The in-situ visualization paper introduces a framework for adding interactive, human-in-the-loop steering controls to existing simulation codes. This capability allows scientists to pause, adjust, and resume large-scale simulations without starting over. The Intel GPU evaluation provides micro-benchmarking data from applications running on two supercomputers powered by Intel GPUs (the ALCF's Aurora exascale system and the University of Cambridge's Dawn system) to guide developers in their optimization efforts.