ALCF staff gather in front of Mira prior to the supercomputer's retirement. (Image: Argonne National Laboratory)

Mira, the ALCF's 10-petaflop IBM Blue Gene/Q system, will be retired on December 31, 2019, ending a seven-plus year run of enabling breakthroughs in science and engineering.

When a treasured and respected colleague hits retirement age, coworkers can grow a bit sentimental about the achievements and highlights of a dedicated and industrious career. It turns out that the same is true for supercomputers that reach the end of their lifetimes.

Mira, the 10-petaflop IBM Blue Gene/Q supercomputer first booted up at the U.S. Department of Energy’s (DOE) Argonne National Laboratory in 2012, will be decommissioned at the end of this year. Its work has spanned seven-plus years and delivered 39.6 billion core-hours to more than 800 projects, solving nearly intractable problems in scientific fields ranging from pharmacology to astrophysics.

“Mira will certainly be missed,” said Michael Papka, director of the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science User Facility that houses Mira. “Serving as our workhorse supercomputer for many years, Mira is beloved by the ALCF user community and our staff for its ability to tackle big science problems as well as its superior reliability.”

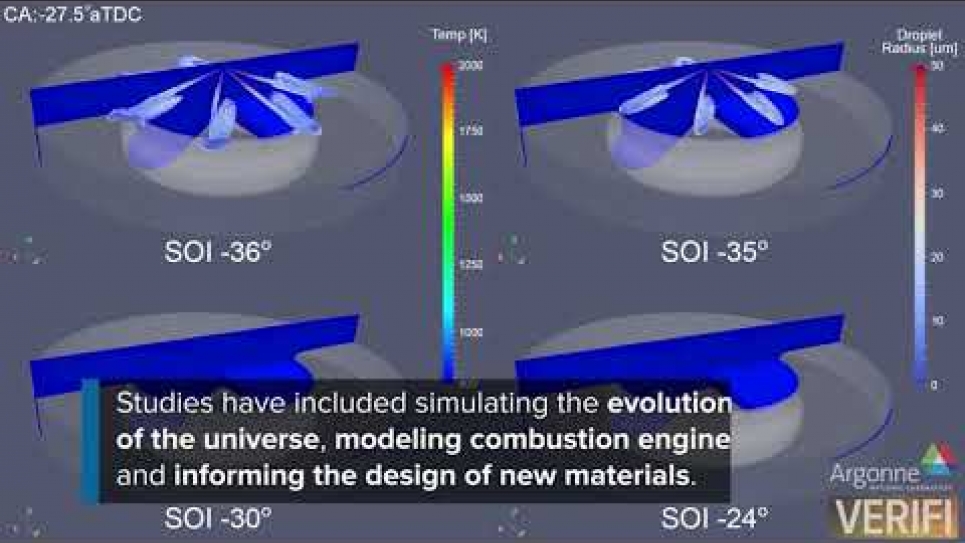

The leadership-class supercomputer is Argonne’s third and final system in the IBM Blue Gene architectural line, which began with the deployment of the lab’s 5.7-teraflop Blue Gene/L machine in 2005. That was followed by Intrepid, a 557-teraflop IBM Blue Gene/P system that served the scientific computing community from 2008 to 2013. When Mira came online in 2012, it was 20 times more powerful than Intrepid, giving researchers a tool that made it possible to perform simulations on unprecedented scales and create more accurate models of everything from combustion engines to blood flow.

Mira remains among the most powerful systems available for open science, sitting at number 22 on the most recent TOP500 list of the world’s fastest supercomputers. When it first launched, Mira was ranked as the third fastest system and topped the Green500 list, which recognizes the world’s most energy-efficient supercomputers. It also remains the third ranked system on the Graph 500 list, a measure focused on a supercomputer's ability to handle data-intensive applications.

One of the necessary advances that made Mira so effective and energy efficient involved directly cooling the machine with pipes carrying water instead of blowing air over the chips. “Water cooling gives you the opportunity to take away a lot more heat from the chips more quickly than air cooling,” said Susan Coghlan, ALCF project director who led the development and deployment of Mira at Argonne.

To enable Mira to sink its teeth into the most challenging problems possible, its designers needed to rethink what a supercomputer should look like. Previous supercomputers were built with progressively more powerful processors, but eventually engineers hit a limit on how many transistors they could fit on an individual core. The answer came in the form of the IBM Blue Gene architecture that eventually resulted in Mira and fit sixteen cores on a single node.

“Mira was the pinnacle of the Blue Gene many-core architectural line, providing a combination of power and reliability that was unprecedented for its time,” Coghlan said.

Each of Mira’s nearly 50,000 nodes functions like a nerve cell, relaying information at the speed of light, through fiber optic cables, to other parts of the machine. Getting these connections organized in the optimal configuration to reduce the time it takes for different parts of the computer to exchange information represents a fundamental challenge of designing a supercomputer.

“A big part of what makes Mira so remarkably effective at solving these complex science challenges is how efficiently the machine is able to communicate across its nodes,” said Kalyan (Kumar) Kumaran, director of technology at the ALCF. “Even when different simulations were running simultaneously on different parts of the system, Mira was able to eliminate communication interference by isolating the traffic for each job.”

Mira’s fiber optic network geometry is called the interconnect, and it routes the signals coming from each node much like an interstate highway system. Its complexity results from the introduction of extra dimensions that shrink the total distance that signals need to cover. Previous versions of the Blue Gene architecture had simpler interconnects, but Mira’s is a defining accomplishment. According to Katherine Riley, director of science at the ALCF, no technology exists today that would replace Mira’s interconnect and be competitive with it.
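To make the effect of extra dimensions concrete, the sketch below compares hop counts in two torus networks with the same number of nodes. Blue Gene/Q systems use a five-dimensional torus, but the specific shapes here (`torus_3d` and `torus_5d`) are illustrative assumptions chosen only so that both hold 49,152 nodes, and the functions are hypothetical helpers rather than anything from IBM or ALCF software.

```python
from math import prod

def torus_diameter(dims):
    """Worst-case hop count between two nodes in a torus with wraparound links."""
    return sum(d // 2 for d in dims)

def mean_hops(dims):
    """Average hop count from one node to a uniformly chosen node (itself included)."""
    total = 0.0
    for d in dims:
        # Along a ring of d nodes, the distance from node 0 to node i is min(i, d - i).
        total += sum(min(i, d - i) for i in range(d)) / d
    return total

# Both shapes are illustrative assumptions with the same total node count.
torus_3d = (32, 32, 48)
torus_5d = (8, 12, 16, 16, 2)
assert prod(torus_3d) == prod(torus_5d) == 49152

for name, dims in (("3D", torus_3d), ("5D", torus_5d)):
    print(f"{name} torus {dims}: diameter {torus_diameter(dims)} hops, "
          f"mean {mean_hops(dims):.1f} hops")
```

Under these assumptions, the five-dimensional layout roughly halves both the worst-case and the average number of hops a message must travel, which is one way to read the claim that extra dimensions shrink the distance signals need to cover.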

The unique topology of Mira’s interconnect will, in some sense, make the system hard to replace, even with future systems that offer more pure computational brawn. “After seven years, most of the time people are completely ready to move on to the next platform, because it will be so much more effective at doing the kinds of problems scientists want to solve,” Riley said. “But frankly, that’s not the case with Mira – it is an incredibly powerful, competitive system, and even though it’s not as big computationally, its effectiveness is so good that it will be used aggressively until the very last minute.”

Another remarkable aspect of Mira is the number and variety of researchers who have been able to take advantage of the system’s full computational horsepower with massive simulations that require using all of its nodes. Over the course of Mira’s lifespan, ALCF users have performed more than 700 full-machine runs on Mira for studies ranging from cosmology to materials science.

“Many supercomputers typically only do these full-machine runs once early in their lives and never again, but our users have done them routinely on Mira,” said Mark Fahey, director of operations at the ALCF. “The fact that Mira was able to handle these full-machine jobs on a regular basis is a testament to its exceptional reliability. On other large-scale systems, typically a small number of processors are down or go down during full-machine runs, which may discourage users from attempting them in the first place.”

When Mira is decommissioned, Argonne’s current leadership-class supercomputer, Theta, will serve as the lab’s primary system for open science until its forthcoming exascale machine, Aurora, arrives in 2021.

While Mira will be missed by ALCF users and staff alike, the system will continue to have a lasting impact on science even after it is powered down. From advancing research at large-scale experimental facilities and cosmology surveys to accelerating the discovery of new materials and pharmaceutical candidates, Mira has enabled a number of groundbreaking studies that have pushed the boundaries of science across a range of disciplines.

For seven years, Mira has been used to handle scientific problems that range from the minuscule to the cosmic. While scientists at the Large Hadron Collider (LHC) in Switzerland have spent years generating many petabytes of particle collision data from their experiments, researchers back at Argonne have been running simulations on Mira to test how different models of the subatomic universe conform to observations.

“A lot of the things we struggled with before we had the opportunity to run codes on a supercomputer like Mira involved detecting very rare events in simulation,” said Argonne high energy physicist Tom LeCompte, who formerly served as the physics coordinator for the ATLAS experiment at the LHC. “In simulation, extraordinary events quickly resemble ordinary events, so you have to sort through many, many ordinary-looking events to find them.”

The struggle with grid computing – the predecessor to supercomputing for high energy physics applications – was that it could accommodate only so many collisions at once, so simulated events that certain models predicted to be improbable registered instead as nonexistent.

“With Mira it became much easier to see what was going on – you’d see that you weren’t actually encountering failures, it was just so much rarer to see these one-in-a-billion events,” LeCompte said. “If you’re looking for a ‘black swan,’ you had the ability to look at many more white swans before the black swan appeared.”
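The statistics behind the black swan remark can be sketched in a few lines. Assuming each simulated collision independently produces the rare event with probability `p`, the chance of seeing at least one in `n` collisions is 1 - (1 - p)^n. The sample sizes below are hypothetical and are not figures from the ATLAS simulations.

```python
import math

def prob_at_least_one(p_event, n_samples):
    """Probability of seeing one or more rare events in n independent samples."""
    # 1 - (1 - p)^n, computed with log1p/expm1 to stay accurate for tiny p.
    return -math.expm1(n_samples * math.log1p(-p_event))

p = 1e-9  # a "one-in-a-billion" event rate, as in the quote
for n in (10**7, 10**9, 10**10):  # hypothetical numbers of simulated collisions
    print(f"{n:.0e} collisions -> P(at least one) = {prob_at_least_one(p, n):.3f}")
```

With too few simulated collisions, the rare event almost never appears and is easy to mistake for one that does not exist. It becomes nearly certain to show up only once the sample size is several times larger than one over the event rate.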

These black swan events in the simulation are then used to validate or invalidate the data that are being produced by theory and experiment. Mira has given particle physicists the ability to run entire models quickly to determine just how they fail to truly depict reality.

Roughly 150 papers are produced by the LHC every year, of which half are searches for new physics. “As experimenters, what we can do is use these events that were generated on Mira to say, ‘this theory might be true, this other theory is not true, and this third theory is true for a certain set of parameters,’” LeCompte said. “We can really test the agreement of data and theory because we understand the backgrounds so much better than before we started looking with Mira.”

Although no model to date fully represents all of the physics being seen experimentally, Mira is helping scientists to develop better theories. “Although it would have been nice to pick a winner right off the bat, Mira allowed us to refine our hypotheses and our models to get closer to a more accurate solution,” LeCompte said. “We generated both a higher number of collisions and higher quality collisions than we had ever done before.”

One of Mira’s other immediate advantages lay in the fact that the code originally written for grid computing applications could be easily adapted to work on the supercomputer. “It was really more of a question of how we could make this run in order to do the best possible science, rather than could we make the code run at all,” LeCompte said.

Mira, in a sense, offered scientists with expertise in specific domains the ability to address computational problems in terms with which they were familiar, rather than having to address a large number of additional computer science challenges merely to get up and running. “I’m a physicist, not a computer scientist, and Mira was the first machine that was generally available that let me think about problems like a physicist,” LeCompte said.

As Mira reaches the end of its useful lifetime, LeCompte reflected that the machine had successfully addressed all the challenges for which it was designed. “Most of the problems that can be solved with a 10-petaflop computer have been solved,” he said. “It’s time to ask the questions that require a 1000-petaflop computer.”

These problems will involve an enormous amount of additional data from the LHC – perhaps as much as a factor of 10 to 20 – which, according to LeCompte, will open up more opportunities for new science.

Just as Mira is being used to focus on some of the deepest mysteries science has to offer, researchers have also used the supercomputer to tackle questions of enormous practical human importance. Scientists led by David Baker, a University of Washington biochemistry professor, have used Mira to look at how different proteins fold in order to come up with new pharmaceutical candidates.

Proteins are made up of amino acids, natural building blocks that form long chains that twist into various configurations. While scientists can determine the sequence of every protein in the human body from the information gleaned from the Human Genome Project, they don’t yet know all the structures. What would be even more useful – and yet more challenging – would be to determine structures and eventually functions of protein-like molecules that use non-natural building blocks.

“Biochemistry is like painting or sculpture,” said Vikram Mulligan, a biochemist at the Flatiron Institute and a collaborator of Baker’s. “Nature gives us a palette that contains only blues, and we want to add something that has a different feel to it, like with reds or greens. Or you might say that nature gives us only clay but we want to make something out of glass or metal that clay would not support.”

By going beyond the chemical groups that are used by living cells, researchers can design new therapies with chemical functionalities that living systems cannot access. “Cells are limited by what is compatible with their chemistry,” Mulligan said.

With natural building blocks, scientists can base therapies around small molecule pharmaceuticals or large antibody proteins. Each of these approaches, however, has drawbacks. Small molecules bind to places they’re not supposed to, causing side effects, while antibody proteins have trouble crossing different barriers within the body and often have to be injected.

The ideal candidate for a pharmaceutical that can be studied on a supercomputer, therefore, is something of an intermediate size. This Goldilocks class of molecules includes macrocycles – smaller proteins that contain internal cross-linkages that create a ring-like structure. Many of the most potent drugs contain these types of macromolecules, and designing new drugs based on their structures provides one of the most fertile proving grounds for scientists, Baker said.

“When we started working on these molecules, we wanted to know how to design something that looked like this,” he said. “We wanted to know if we could get down to smaller sizes or use non-natural building blocks to make things that looked like the most potent drugs we know about but don’t know how to design.”

That’s where Mira’s strengths came into play. For any given sequence of building blocks – natural or synthetic – a protein can fold itself into an astronomical number of configurations. Only one of these conformations, however, will be the lowest-energy state – the position the protein will prefer to remain in for the vast majority of the time.

With relatively low computational cost, Baker and Mulligan were able to make lots of candidate sequences for particular targets. The high computational cost, and the need for a supercomputer like Mira, resulted from the need to evaluate the trillions and trillions of potential conformations to single out the lowest-energy states. “If you want to bind to something, and you need to be in a certain conformation to do it, you want to spend all of your time in that conformation to do it well,” Mulligan said.
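One way to see why a single, well-separated lowest-energy state matters is to compute Boltzmann populations, the equilibrium fraction of time a molecule spends in each conformation. The sketch below is a toy calculation, not the team's design software: the two energy lists are hypothetical, and a real search would cover vastly more conformations than five.

```python
import math

K_B = 0.0019872  # Boltzmann constant in kcal/(mol*K)

def boltzmann_populations(energies, temperature=298.0):
    """Fraction of time spent in each conformation at thermal equilibrium."""
    e_min = min(energies)
    # Shift by the minimum energy so the exponentials stay well scaled.
    weights = [math.exp(-(e - e_min) / (K_B * temperature)) for e in energies]
    z = sum(weights)
    return [w / z for w in weights]

# Hypothetical conformational energies in kcal/mol for two candidate designs.
funnelled = [0.0, 3.0, 3.5, 4.0, 4.2]   # one state clearly lowest
frustrated = [0.0, 0.2, 0.3, 0.5, 0.6]  # several nearly equal states

for name, energies in (("funnelled", funnelled), ("frustrated", frustrated)):
    pops = boltzmann_populations(energies)
    print(f"{name}: {pops[0]:.0%} of the time in the lowest-energy state")
```

In this toy comparison, the design with a clear energy gap spends nearly all of its time in the target conformation, while the design with several competing states spends most of its time elsewhere.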

After determining virtually which of the cyclic peptide candidates had unique low-energy states, Baker and Mulligan were able to take the most promising handful of designs back to the laboratory to actually synthesize them. “The idea is that this becomes a very fast process, relatively speaking,” Baker said. “Once we’ve identified something on the computer, it may be a matter of a couple of weeks before we’ve made it in the lab.”

Having a resource like Mira means helping bring real therapies closer to reality, Mulligan said. By combining information gathered from Mira with experimental evidence, Mulligan and Baker were able to see that a particular peptide inhibited an enzyme thought to be responsible for antibiotic resistance in a test tube setting. “The idea is that we are getting closer to something that might eventually form the basis of actual drugs,” Mulligan said.

The picture of a molecular structure gleaned from a Mira-based simulation and the analysis of a molecule created in the laboratory match extremely well, Baker said. “What we see on the supercomputer is exactly like what we see in the experiment. We have to do experiments to confirm that our assumptions in our code match reality, and it turns out that they do very well.”

In addition to informing the design of promising drug candidates, Mira has had a major impact on advancing the study of novel materials for a range of applications, such as batteries and catalysts.

The Argonne supercomputer played an instrumental role in making the quantum Monte Carlo (QMC) method a more effective and accessible tool for the computational materials science community. Previously limited by a lack of sufficient computing power, the computationally demanding QMC method is able to accurately calculate the complex interactions between many electrons, providing realistic predictions of materials properties that elude traditional methods like density functional theory.

“Mira really changed the game for QMC,” said Anouar Benali, an Argonne computational scientist and a co-developer of the open-source QMCPACK code. “Before Mira came along, QMC was limited to ‘toy problems’ that involved modeling systems of small atoms or solids. Now, we routinely use the method to perform rigorous calculations on more realistic materials.”

Mira’s massively parallel architecture was particularly well suited for the QMC approach, which requires computing a huge number of samples simultaneously to predict the many-body interactions for a given material. The system enabled researchers to study larger systems in a reasonable amount of time, providing a launching pad for QMC’s application to a wide variety of materials.
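The parallel structure of the approach can be illustrated with a toy variational Monte Carlo estimate for a single particle in a one-dimensional harmonic well. This is a teaching sketch under stated assumptions and is not how QMCPACK works internally: it samples the trial wavefunction directly from a Gaussian rather than with a random walk, and `alpha`, the batch count, and the sample count are all hypothetical choices.

```python
import random

def local_energy(x, alpha):
    """Local energy of the trial wavefunction exp(-alpha * x**2)
    for H = -1/2 d2/dx2 + 1/2 x**2 (units where hbar = m = omega = 1)."""
    return alpha + x * x * (0.5 - 2.0 * alpha * alpha)

def batch_energy(alpha, n_samples, seed):
    """Average local energy over one independent batch of samples."""
    rng = random.Random(seed)
    sigma = (1.0 / (4.0 * alpha)) ** 0.5  # |psi|^2 is a Gaussian with this width
    total = sum(local_energy(rng.gauss(0.0, sigma), alpha) for _ in range(n_samples))
    return total / n_samples

def vmc_energy(alpha, n_batches=16, n_samples=10_000):
    """Combine independent batches; each batch could run on a separate node."""
    batches = [batch_energy(alpha, n_samples, seed) for seed in range(n_batches)]
    return sum(batches) / n_batches

for alpha in (0.3, 0.4, 0.5, 0.6):
    print(f"alpha={alpha}: E ~ {vmc_energy(alpha):.4f}")
```

Because the batches never exchange information until the final average, the work divides cleanly across processors, which is the property that makes sampling methods of this kind a natural fit for a massively parallel machine. The energy estimate is lowest at `alpha = 0.5`, which is the exact ground state of this toy problem.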

For example, Benali, in collaboration with colleagues from Argonne, Oak Ridge, Sandia, and Lawrence Livermore national laboratories, used Mira to carry out QMCPACK simulations that accurately captured the magnetic properties of a titanium oxide material (Ti4O7) for the first time. Their calculations revealed the material’s ground state – its lowest-energy, most stable state – which is critical to determining many other important properties, such as crystal structure, conductivity, and magnetism.

“The titanium oxide study is just one example of how we used Mira to demonstrate the reliability and efficiency of QMC,” Benali said. “We’ve also successfully applied the method to studies of graphene, a potential cancer drug called ellipticine, and noble gas crystals.”

By enabling these types of studies, Mira has helped QMC become an increasingly popular technique for conducting materials science and chemistry research on the world’s most powerful supercomputers.

An ongoing project led by researchers from Oak Ridge National Laboratory, for example, is now tapping Theta and Oak Ridge’s Summit supercomputer to further advance the use of QMC to better understand, predict, and design functional and quantum materials.

Scientists believe that QMC will be an even more valuable technique in the upcoming exascale era. Both DOE’s Exascale Computing Project and the ALCF’s Aurora Early Science Program are supporting projects that are focused on preparing QMC for the scale and architecture of DOE’s future exascale systems.

“It’s been exciting to see how our work on Mira has helped QMC evolve from a niche tool to a method that is now at the forefront of computational materials science,” Benali said.

Just as in materials discovery and particle physics, scientists working in cosmology also have to look for ways to reconcile experimentation or observation with simulation. Large sky surveys, such as the Sloan Digital Sky Survey and the upcoming Large Synoptic Survey Telescope (LSST), will assemble an enormous amount of data about the stars and galaxies in the night sky.

The current structure of the universe offers clues as to its distant past, and the process of exploring the evolution of the universe requires a supercomputer like Mira.

Computing the universe is no easy task – it requires a tremendous amount of memory and almost a billion core-hours of processor time to render what Argonne physicist and computational scientist Katrin Heitmann called a “synthetic sky.”
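For a sense of scale, core-hours can be converted into time on the whole machine. The sketch below assumes a node count of 49,152, consistent with the "nearly 50,000 nodes" and sixteen cores per node described in this article, and it rounds "almost a billion" up to exactly one billion. The helper function is hypothetical.

```python
NODES = 49_152       # assumption, consistent with "nearly 50,000 nodes"
CORES_PER_NODE = 16  # sixteen cores per node, per the article
CORES = NODES * CORES_PER_NODE

def full_machine_days(core_hours, cores=CORES):
    """Wall-clock days needed if every core worked on the job continuously."""
    return core_hours / cores / 24.0

synthetic_sky = 1e9  # "almost a billion core-hours", rounded up
lifetime = 39.6e9    # total core-hours delivered, per the article

print(f"cores: {CORES:,}")
print(f"synthetic sky: ~{full_machine_days(synthetic_sky):.0f} full-machine days")
print(f"lifetime delivery: ~{full_machine_days(lifetime) / 365:.1f} full-machine years")
```

Under these assumptions, a billion core-hours amounts to nearly two months of the entire system running nothing else.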

When Mira came online, Heitmann and her colleagues were among the first researchers to leverage the system’s capabilities by carrying out the Outer Rim simulation, one of the largest cosmological simulations ever performed at the time. Using their Hardware/Hybrid Accelerated Cosmology Code (HACC), the team’s massive simulation evolved 1.1 trillion particles to track the distribution of matter in the universe as governed by the fundamental principles of physics, as well as observational input from earlier sky surveys.

The Outer Rim simulation has enabled a number of follow-on studies and has been used to create a major synthetic sky catalog for the LSST Dark Energy Science Collaboration, for which Heitmann serves as the Computing and Simulation Coordinator.

She and her colleagues also combined simulations on Mira with computations carried out on Oak Ridge National Laboratory’s now-retired Titan supercomputer to create the “Mira-Titan Universe.” This simulation suite was developed to provide accurate predictions for a range of cosmological observables and for generating new sky maps.

With each new and increasingly powerful supercomputer deployed at DOE’s national labs, Heitmann and her team have a new tool at their disposal for enhancing the accuracy and scale of their cosmology simulations.

“Leadership supercomputers like Mira and its successors have allowed us to perform more detailed simulations much more quickly than was previously possible,” Heitmann said. “We’re now at a point where our simulations have reached the same size as the surveys carried out by state-of-the-art telescopes. We’re looking forward to what will be possible with Argonne’s exascale system, Aurora.”

But that doesn’t mean Mira’s contributions to cosmology research will end when the system is decommissioned. As one of the final users of the supercomputer, Heitmann is leveraging Mira for a massive simulation campaign that reflects cutting-edge observational advances from satellites and telescopes and will form the basis for sky maps used by numerous cosmological surveys.

The new Mira simulations will help researchers address numerous fundamental questions in cosmology, enable the refinement of existing predictive tools, and aid the development of new models. This will impact both ongoing and upcoming surveys, including LSST, the Dark Energy Spectroscopic Instrument (DESI), SPHEREx, and the “Stage-4” ground-based cosmic microwave background experiment (CMB-S4).

“It’s amazing to me that, right before being powered down, this system is still capable of something so useful and expansive,” Riley said. “The research community will be taking advantage of this work for a very long time.”
