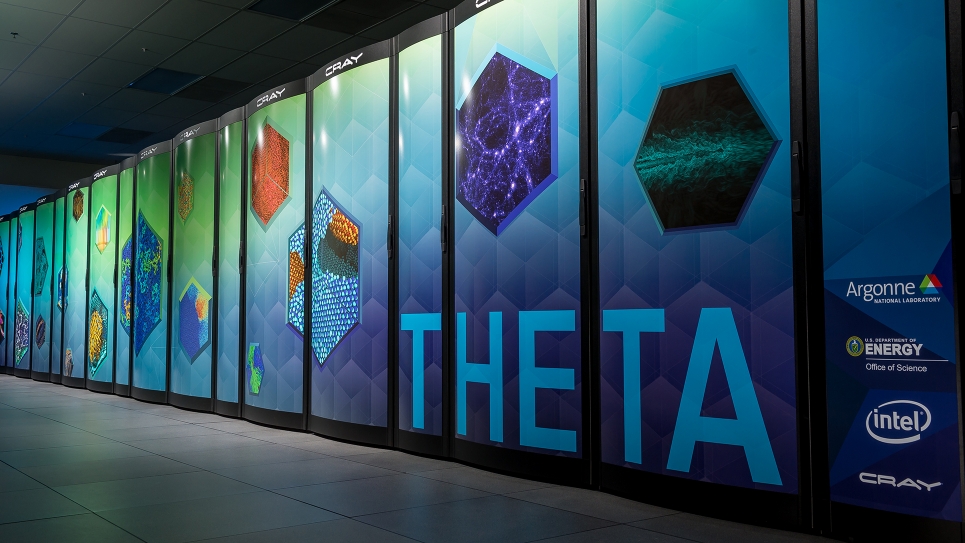

The ALCF's Theta supercomputer enables researchers to accelerate the pace of discovery and innovation across scientific disciplines. (Image: Argonne National Laboratory)

Abstract: MPI’s collective operations are a powerful abstraction found in many HPC programs. By defining the communication pattern of an entire group of processes, collectives allow programmers to gloss over the intricacies of process-to-process messaging. Similarly, the higher-level description gives MPI researchers more flexibility to implement the requested message flow. In this talk, I will describe the complexity of selecting algorithms to implement collectives, and I will support this discussion with data from our new automated collective testing platform. Then, I will describe our solution: a machine learning approach that will predict the optimal algorithm even in untested scenarios. I will conclude with a discussion of future directions for this ongoing project.

Bio: Mike Wilkins is a second-year Ph.D. candidate at Northwestern University, co-advised by Dr. Peter Dinda and Dr. Nikos Hardavellas. He is also a visiting student in the programming models/runtime systems group of the MCS division at Argonne, advised by Dr. Min Si. Mike’s past research interests include cache coherency, exactness in scientific computing, and memory usage analysis. Before pursuing graduate school, Mike completed three internships and won Best Senior Thesis while completing his B.S. at Rose-Hulman Institute of Technology.